Neural networks are a core component of the continuously evolving AI landscape. They enable AI systems to identify and process data in a way loosely inspired by the human brain. Their structure, a layered arrangement of interconnected nodes, underpins both machine learning and deep learning. It helps computers learn from their mistakes and improve continuously.

Artificial neural networks use interconnected nodes as the counterparts of neurons in the human brain. The nodes are arranged in layers, with each layer having a distinct function. The use of neural networks in solving complicated problems, such as face recognition or document summarization, has sparked curiosity about how they work. Let us learn more about the structure of neural networks to develop a better understanding of their working mechanisms.

Understanding the Basics of Neural Networks

A definition of neural networks is a useful starting point for understanding their architecture. Most answers to questions like ‘What is the structure of the neural network?’ point to the similarities between neural networks and the human brain. The structure of artificial neural networks is modeled on the biological neural networks found in our brains.

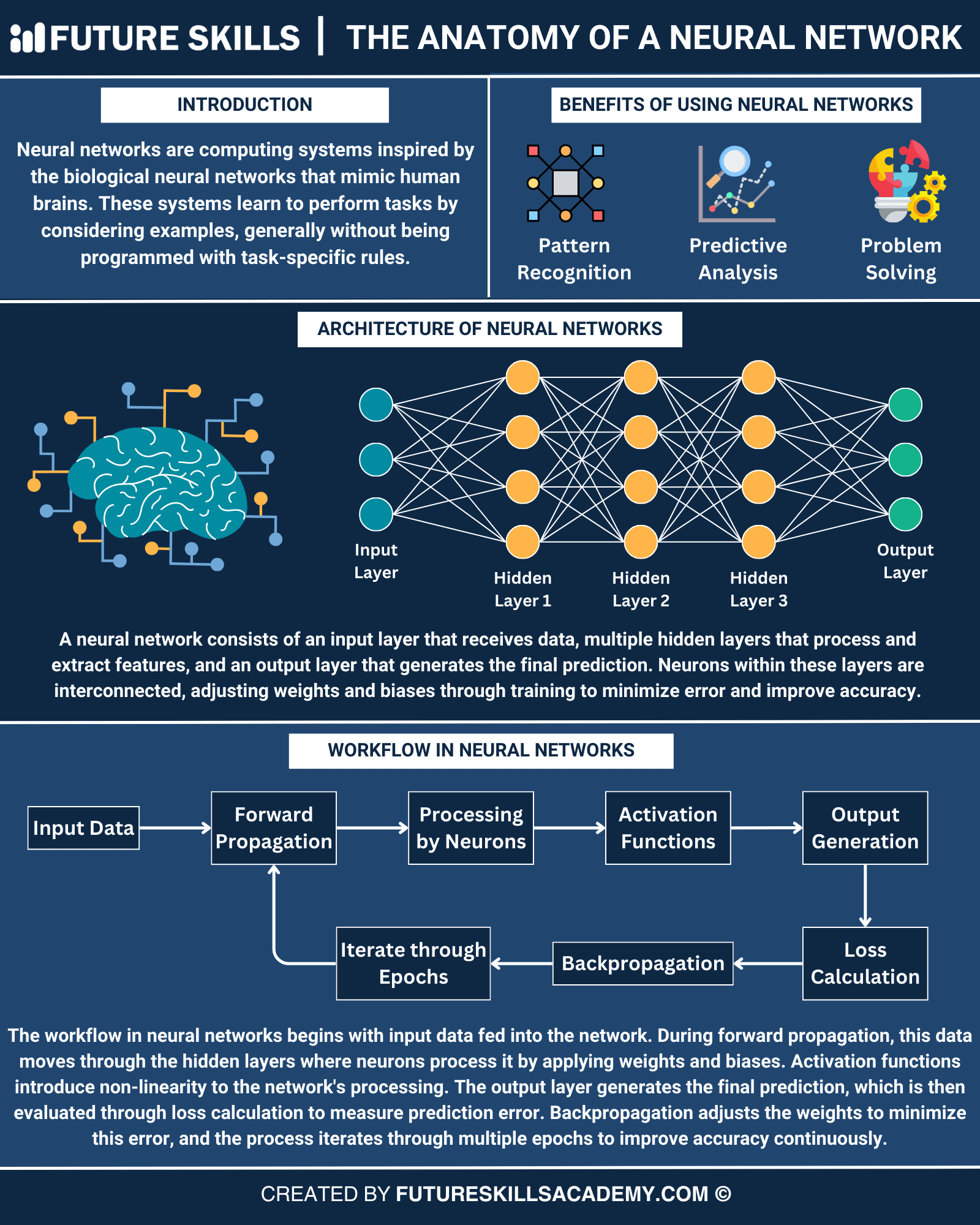

Neural networks learn to perform tasks from examples, much as humans learn from experience. Because the behavior is learned from data, you don’t have to program task-specific rules into the network.

Unraveling the Benefits of Neural Networks

The structure of neural networks opens up a wide range of advantages. Neural networks allow computers to handle tasks that normally call for human-like cognitive abilities. The most notable benefits include pattern recognition, problem solving, and predictive analytics. Because these capabilities are learned from data, neural networks can enhance artificial intelligence systems without extensive task-specific programming.

Neural networks can detect intricate patterns in massive datasets by learning from examples, which lets them extract valuable insights. The same ability supports predictive analytics across a broad range of tasks. You can also rely on neural networks for problem solving, since they draw on what they learned from earlier examples.

- Pattern Recognition

Pattern recognition is one of the most visible use cases of neural networks. They can identify patterns in massive datasets and learn from them, which supports sophisticated data interpretation. Medical imaging is a good example: neural networks can recognize patterns in MRI scans and X-rays to support diagnosis.

- Predictive Analytics

Neural networks can support predictive analytics by modeling complex relationships in diverse datasets. Because they can pick up patterns in historical data, they can forecast future trends. The hidden layers let the network capture non-linear relationships, which can improve prediction accuracy.

- Problem Solving

The architecture of neural networks is loosely modeled on the human brain, which allows them to learn from experience. That experience helps neural networks solve problems in scenarios where traditional methods fail. Neural networks can draw insights from non-linear and complex data. You can use them for problems that require pattern recognition, prediction, filtering, classification, or optimization.

Breaking Down the Structure of Neural Networks

The structure of neural networks is responsible for the wide range of benefits they offer in the AI landscape. You might wonder how neural networks can function like the human brain. The architecture underlying their design answers that question.

You can develop a better understanding of how neural networks work by learning about their architecture. The primary components of a neural network are three types of layers: the input layer, the hidden layers, and the output layer. A network can include one or more hidden layers, depending on its application. Nodes in one layer are connected to nodes in the next layer; each connection carries a weight, and each node typically has a bias. Let us dive deeper into the layers of neural networks to know more about their working mechanisms.
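To make the layered structure concrete, the sketch below counts the weights and biases in a small network. It assumes a fully connected design, where every node feeds every node in the next layer, with one weight per connection and one bias per non-input node. The layer sizes and the function name `count_parameters` are illustrative and do not come from any particular library.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of nodes per layer, from input to output.
    """
    weights = 0
    biases = 0
    # Walk over each pair of adjacent layers.
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights += n_in * n_out  # one weight per connection
        biases += n_out          # one bias per receiving node
    return weights, biases


# Hypothetical network: 3 inputs, two hidden layers of 4 nodes, 1 output.
weights, biases = count_parameters([3, 4, 4, 1])
print(weights, biases)  # 32 9
```

Adding a hidden layer or widening an existing one increases both counts, which is one reason more complex problems tend to need more training data.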

- Input Layer

The first layer of nodes in any neural network is the input layer, which takes the input data into the network. Each node, or neuron, in the input layer represents a distinct feature of the dataset. Consider a real estate price prediction model: the neurons in its input layer would represent features such as the size of the property and its distance from public utilities such as schools or hospitals.
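As a small illustration of the real estate example, the sketch below turns one property record into the ordered list of numbers that an input layer would receive. The feature names and values are hypothetical, chosen only to mirror the features mentioned above.

```python
# Hypothetical feature names; a real dataset would define its own.
FEATURE_ORDER = ["size_sq_m", "distance_to_school_km", "distance_to_hospital_km"]


def to_input_vector(property_record):
    """Turn a property record into the ordered list fed to the input layer."""
    return [float(property_record[name]) for name in FEATURE_ORDER]


# Made-up example values, for illustration only.
house = {"size_sq_m": 120, "distance_to_school_km": 0.8, "distance_to_hospital_km": 2.5}
input_vector = to_input_vector(house)

# One input neuron per feature.
print(len(input_vector), input_vector)  # 3 [120.0, 0.8, 2.5]
```

In practice, features with very different ranges are usually rescaled before training, but that step is left out here to keep the mapping from features to input neurons visible.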

- Output Layer

The output layer is responsible for generating the final predictions of the neural network. Output layers have neurons that represent the different outputs you want from a specific input. You will find one neuron in the output layer in applications where you expect a single output. On the other hand, tasks that can generate multiple outputs would have multiple neurons in the output layer.
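The difference between one and several output neurons can be sketched as follows. The single-output case is just one number, such as a price estimate. For the multi-output case, this sketch assumes a classification task and applies softmax, a common choice that the article itself does not name, to turn raw scores into probabilities. All values are made up.

```python
import math


def softmax(scores):
    """Convert raw output-layer scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Single-output task (e.g. a price estimate): one output neuron, one number.
price_output = [245000.0]  # made-up value

# Multi-output task (e.g. three categories): one output neuron per category.
class_scores = [2.0, 1.0, 0.1]  # made-up raw scores
class_probs = softmax(class_scores)

print(len(price_output), len(class_probs))
print(class_probs.index(max(class_probs)))  # 0, the highest-scoring category
```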

- Hidden Layers

The hidden layers are where most of the computation in a neural network happens. A network can have multiple hidden layers, depending on the complexity of the problem at hand. The hidden layers transform the raw input data into abstract representations that the network can work with. Every node in a hidden layer receives input from nodes in the previous layer, performs a computation, and passes the result to the next layer.

- Weights and Bias

The neurons or nodes in adjacent layers of a neural network are connected to each other. During training, the network adjusts the weights and biases to minimize errors and improve accuracy. Weights and biases are therefore central to the working mechanisms of neural networks.

Neurons are connected to each other through weights, which modulate the direction and strength of signals. Weights downplay or emphasize certain inputs to achieve the desired output. Biases, on the other hand, shift the input to the activation function, so a neuron can activate more or less readily regardless of its inputs. Biases help neural networks react in the right manner to different input conditions.
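A minimal sketch of how weights and a bias combine inside a single neuron, before any activation function is applied. The function name `weighted_sum` and all numbers are illustrative assumptions.

```python
def weighted_sum(inputs, weights, bias):
    """Compute the value a neuron passes to its activation function."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


inputs = [2.0, 4.0]  # made-up input signals

# A zero weight silences the second input entirely.
print(weighted_sum(inputs, [1.0, 0.0], 0.0))   # 2.0
# A negative weight makes the second input pull the sum down.
print(weighted_sum(inputs, [1.0, -0.5], 0.0))  # 0.0
# The bias shifts the result regardless of the inputs.
print(weighted_sum(inputs, [1.0, -0.5], 3.0))  # 3.0
```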

- Activation Functions

The answers to questions like “What is the structure of the neural network?” also draw attention to activation functions. An activation function is applied to the weighted sum computed by a node and determines how strongly the node activates, if at all. Common activation functions include the sigmoid function and the Rectified Linear Unit, or ReLU. Different activation functions suit different applications.
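The two activation functions named above can be written in a few lines. This is a plain-Python sketch for illustration; the split into two branches inside `sigmoid` is an implementation choice made here to avoid overflow for large negative inputs.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, this form avoids overflow in exp().
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x):
    """Pass positive values through unchanged and clip negatives to zero."""
    return max(0.0, x)


for value in (-2.0, 0.0, 3.0):
    print(value, sigmoid(value), relu(value))
```

Sigmoid returns exactly 0.5 for an input of zero and approaches 0 or 1 at the extremes, while ReLU is zero for every negative input and linear for positive ones.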

Exploring the Workflow of Neural Networks

The components of neural network architecture show how neural networks resemble the human brain. It is reasonable to wonder how those layers and components actually work together. The workflow of a neural network shows the purpose of each component in producing the desired results.

The working mechanism of neural networks starts when you feed input data into the network. In the next step, the network passes the input data through the hidden layers to the output layer, which is known as forward propagation. The neurons in the hidden layers process the data by using their weights and biases. Activation functions play a vital role by introducing non-linearity into the processing, which allows the network to model relationships that a purely linear model cannot.
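Forward propagation can be sketched for a tiny network with two inputs, one hidden layer of two ReLU neurons, and one output neuron. The weights and biases below are hand-picked for illustration, not learned, and the identity activation on the output neuron is an assumption made for this example.

```python
def relu(x):
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer: weighted sum plus bias per neuron, then activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(inputs):
    """Forward propagation through one hidden layer and one output neuron."""
    # Hand-picked, illustrative parameters; a trained network learns these.
    hidden = dense_layer(inputs, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    # The output neuron uses the identity activation here (an assumption).
    return dense_layer(hidden, [[1.0, 0.5]], [0.25], lambda z: z)


print(forward([1.0, 2.0]))  # [1.25]
```

The first hidden neuron computes -1.5, which ReLU clips to 0, so only the second hidden neuron contributes to the output. That clipping is the non-linearity at work.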

The output layer generates the final prediction from the processed data. After the output is generated, the prediction moves to the loss calculation stage, where the network measures the prediction error. Subsequently, the network uses backpropagation to work out how much each weight and bias contributed to the error and adjusts them to reduce it. The process is repeated over multiple epochs to improve the accuracy of the output.
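The cycle of prediction, loss calculation, and adjustment can be sketched with the simplest possible case: a single linear neuron trained by gradient descent on mean squared error. With only one neuron, backpropagation reduces to one application of the chain rule; multi-layer networks pass the same kind of gradients backward layer by layer. The data, learning rate, and epoch count are made-up values for illustration.

```python
# Toy data that follows y = 2x + 1 (made up for this example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b = 0.0, 0.0        # parameters start untrained
learning_rate = 0.05   # illustrative value
n = len(xs)


def mean_squared_error(w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


initial_loss = mean_squared_error(w, b)

for epoch in range(2000):
    # Forward pass and error for every example.
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n
    # Step against the gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mean_squared_error(w, b)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each pass over the data is one epoch, and the loss shrinks as the weight and bias move toward the values that generated the data.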

Final Thoughts

This introduction to the structure of neural networks provides a glimpse of the important components that drive their functionality. You can establish a clear relationship between the benefits of neural networks and their anatomy. For example, the number of hidden layers in a neural network depends on the complexity of the problem you want to solve. An in-depth understanding of the structure of neural networks can help you understand their role in pushing the AI revolution forward.

The most crucial element of neural networks is their layered structure. In addition, components such as weights, biases, and activation functions play a crucial role in their working mechanisms. The range of functionality you can expect from a neural network depends on how effectively its structure is optimized. Learn more about neural networks and their use cases with credible resources right now.