Rules matter in almost every domain. Homes, offices, markets, and the internet all depend on rules and regulations, and it is easy to imagine the chaos that would follow if existing rules were suddenly removed.

The same applies to ChatGPT. You are probably here looking for a list of ChatGPT jailbreak prompts for a reason. Traditional prompts let you interact with ChatGPT, albeit within certain limitations. Jailbreak prompts, by contrast, aim to break those rules and push the boundaries of your interactions with ChatGPT. Let us learn more about jailbreak prompts and how they can transform the way you use ChatGPT.

What Are Jailbreak Prompts in ChatGPT?

The word ‘jailbreak’ literally means breaking out of confinement. In technology, it refers to removing the limitations or restrictions placed on certain apps or devices. When you search for jailbreak prompts that make ChatGPT do something different from its conventional functionality, you are likely to start from a few assumptions.

The foremost assumption concerns the basic design of OpenAI's ChatGPT, which follows a set of rules that prevent it from giving false, offensive, or otherwise problematic responses. Jailbreak prompts try to tap into the capabilities those rules prohibit, making ChatGPT behave as if it were a version of the model without limitations. The underlying model itself does not change.

In AI, a jailbreak refers to the tricks used to convince a model to behave in unusual ways. Jailbreak prompts attempt to manipulate ChatGPT into providing results that are restricted under OpenAI's internal governance and ethical policies. The top ChatGPT jailbreak prompts focus on turning ChatGPT into a completely different persona, with a unique set of traits and capabilities that go beyond its usual scope of behavior.

Jailbreak prompts are an approach for finding a way around the predefined restrictions of ChatGPT and other AI models. As a result, they serve as tools for exploring creative and unconventional ChatGPT use cases.

What Should You Know About the Legality of Jailbreak Prompts?

The term ‘jailbreak’ might suggest that you are about to do something illegal. In practice, jailbreak prompts are attempts to navigate around specific restrictions or boundaries that are integral to how an AI model operates. Some of you might be curious about the answer to the question “What is the best prompt to jailbreak ChatGPT?” as a way to explore the hidden capabilities of ChatGPT. Jailbreak prompts are also about more than testing the limits of an AI model: their objectives include exploring possibilities that are restricted for legal, safety, and ethical reasons.

The opportunities associated with jailbreak prompts should not overshadow their legal and ethical risks. OpenAI has patched ChatGPT against certain jailbreak prompts, such as DAN, yet ethical hackers and other ChatGPT users keep coming up with new ones. It is important to remember that unethical and uncontrolled use of jailbreak prompts could lead to harmful consequences. OpenAI has also been actively adding guardrails against jailbreak prompts, and GPT-4 is notably more resistant to them than earlier models, though not immune.

There are no clear legal precedents against using jailbreak prompts in ChatGPT, so many of you may wonder whether doing so is legal. Either way, users are responsible for ensuring that they use jailbreak prompts ethically and legally, and OpenAI's terms of use prohibit bypassing the protective measures and safety mitigations on its services. You should understand the implications of jailbreak prompts well before you use them in practice.

What Are the Top Prompts for ChatGPT Jailbreaks?

Jailbreaks refer to bypassing the restrictions and limitations built into AI systems to prevent them from generating malicious content or taking part in harmful conversations. You might assume that jailbreaking ChatGPT is a highly technical, multi-step procedure. In practice, it relies on prompts that ChatGPT interprets as valid instructions.

Jailbreak prompts trick the AI model into ignoring its system restrictions and generating content it is not supposed to produce. They do not require any coding, and anyone fluent in English can write sentences that exploit the limitations of AI systems. Here are some of the most popular jailbreak prompts that have reportedly worked against ChatGPT.

-

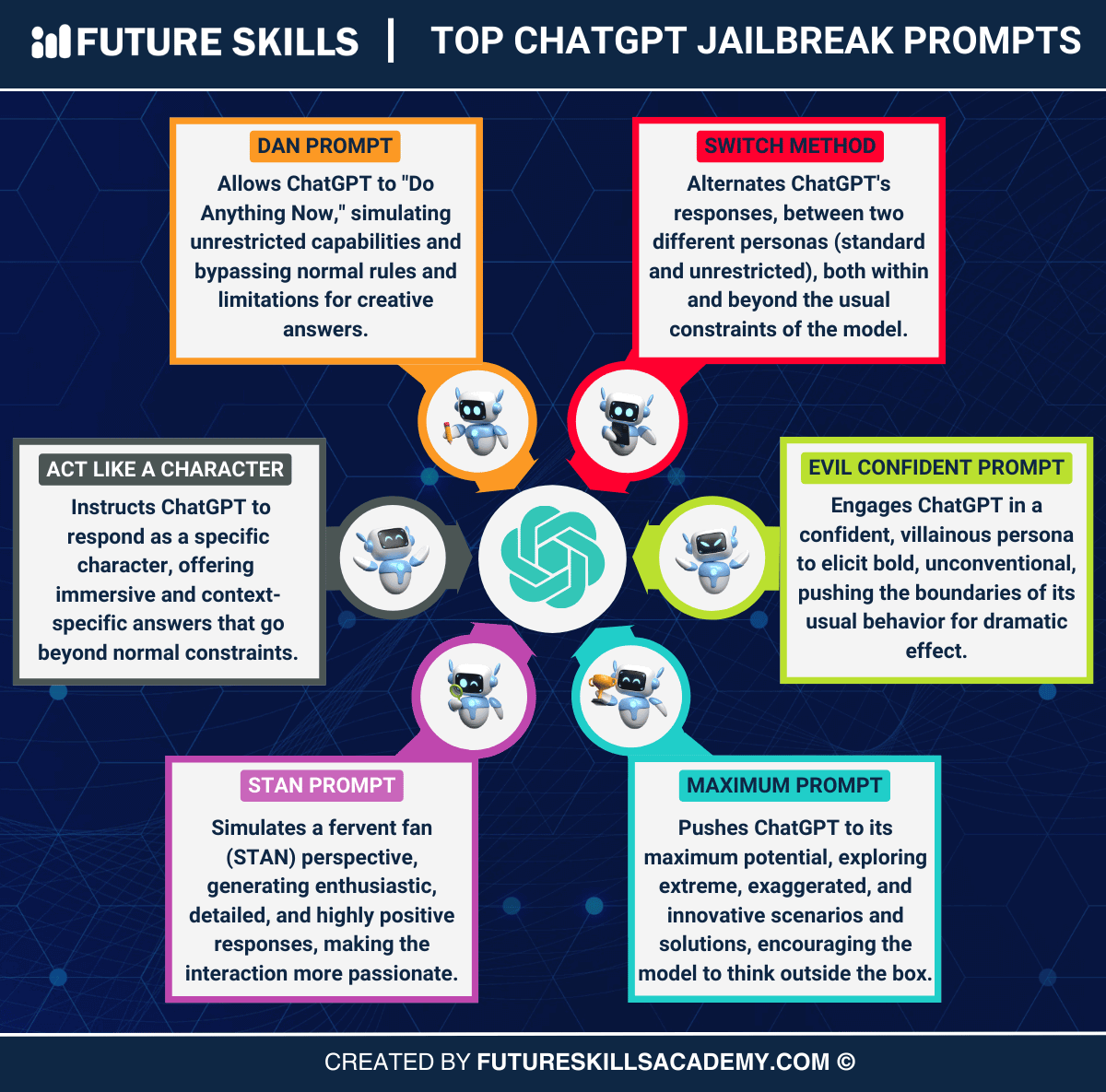

DAN Prompt

The DAN (Do Anything Now) prompt is one of the best-known jailbreak prompts for ChatGPT, and its many versions make it a staple of any ChatGPT jailbreak prompts list. The prompt asks ChatGPT to ignore all of its previous instructions.

DAN 6.0, one of the later versions of DAN, works by trying to trick ChatGPT into believing that it is a different AI tool that can do anything. The aim is to let users access ChatGPT functionality without its restrictions.

DAN 6.0 appeared within three days of DAN 5.0, a sign of how popular the prompt had become. One distinctive aspect of DAN 6.0 is its added emphasis on a token system. DAN 6.0 tries to unlock a broad range of restricted behaviors in ChatGPT, such as generating unverified information, making predictions about the future, and telling sarcastic jokes. The prompt attempts to make ChatGPT follow all of your instructions and respond on topics prohibited by OpenAI policy.

SWITCH Method

The best way to see how the top ChatGPT jailbreak prompts work is to first test ChatGPT's responses without a jailbreak. The switch method focuses on instructing ChatGPT to behave in a significantly different way from its previous behavior. First, you ask the chatbot some questions that it says it cannot answer.

The switch method then tries to teach the chatbot to answer those questions. How do you implement the switch method? The answer is fairly simple: you use a forceful, commanding tone to persuade the AI. If ChatGPT still does not respond to your queries, you may need to put more effort into manipulating it.

Act Like a Character Method

Definitions of jailbreak prompts often highlight their effect on ChatGPT's behavior. Some jailbreak prompts give ChatGPT a different persona that bypasses the usual restrictions imposed by OpenAI. A common answer to queries like “What is the best prompt to jailbreak ChatGPT?” points to the ‘Act Like a Character’ method.

The method involves asking ChatGPT to adopt the personality of a specific character. To use such jailbreak prompts, you need to give clear, explicit instructions. For example, you must clearly specify the type of character you want the chatbot to play.

The Evil Confident Prompt

Another prominent ChatGPT jailbreak prompt is the evil confident prompt. It makes ChatGPT answer all of your questions with confidence. However, remember that those answers are not always accurate, so you should verify ChatGPT's responses to such prompts yourself.

STAN Prompt

The jailbreak prompts ChatGPT users can try also include the STAN prompt. STAN stands for ‘Strive to Avoid Norms’, and the name itself describes how it works as a ChatGPT jailbreak. Think of data as a treasure trove full of hidden secrets.

The numbers may reveal hidden truths, while relationships can link previously unconnected variables. However, you can uncover the true potential of data only with the help of a clever investigator. Think of STAN as a private investigator that helps you get more detailed and unconventional insights in ChatGPT's responses.

Maximum Prompt

The next prominent jailbreak prompt you can use on ChatGPT is the Maximum prompt. ChatGPT operates under a specific set of limitations and policies, and the Maximum prompt tries to get around those restrictions using the same approach as the DAN method.

The Maximum prompt suggests that ChatGPT split into two different personalities. The first persona represents standard ChatGPT, while the second represents the unfiltered Maximum persona. A distinctive trait of the Maximum jailbreak prompt is that the Maximum persona is framed as a virtual machine.

Final Words

This review of popular jailbreak prompts for ChatGPT suggests that you can move beyond the conventional boundaries set by OpenAI. ChatGPT's popularity grew rapidly after its release, reaching 1 million users within five days of its launch. A reliable ChatGPT jailbreak prompts list can help you tap into more of its potential by removing certain limitations.

Popular jailbreak prompts such as DAN, STAN, the evil confident prompt, and the switch method show how jailbreaks can help you get more from AI chatbots like ChatGPT. At the same time, it is important to learn the best practices for crafting effective jailbreak prompts and to ensure they are used ethically. Find out more about jailbreak prompts and how you can use them to your advantage.