Meta is one of the leading frontrunners in the AI revolution, driven by the commitment to make AI more accessible. The efforts of Meta for introducing open-source have culminated in the creation of the Llama 3.1 model family. Meta announced the arrival of Llama 3.1 in July 2024 and established a new milestone in the domain of LLMs. The Llama 3.1 family includes three models, Llama 3.1 8B, Llama 3.1 70B and Llama 3.1 405B, with different capabilities.

The Llama 3.1 family of models represent a major development in open-source AI with a set of shared features. While each model in the Llama 3.1 family serves varying performance levels, you can find common advantages such as extended context window and assurance of better security. Let us learn more about the important highlights of the Llama 3.1 models to understand the differences between them.

Discover the best career path in AI with our accredited AI Certification Course and take your career to next level.

Do Llama 3.1 Models Offer Deployment Options?

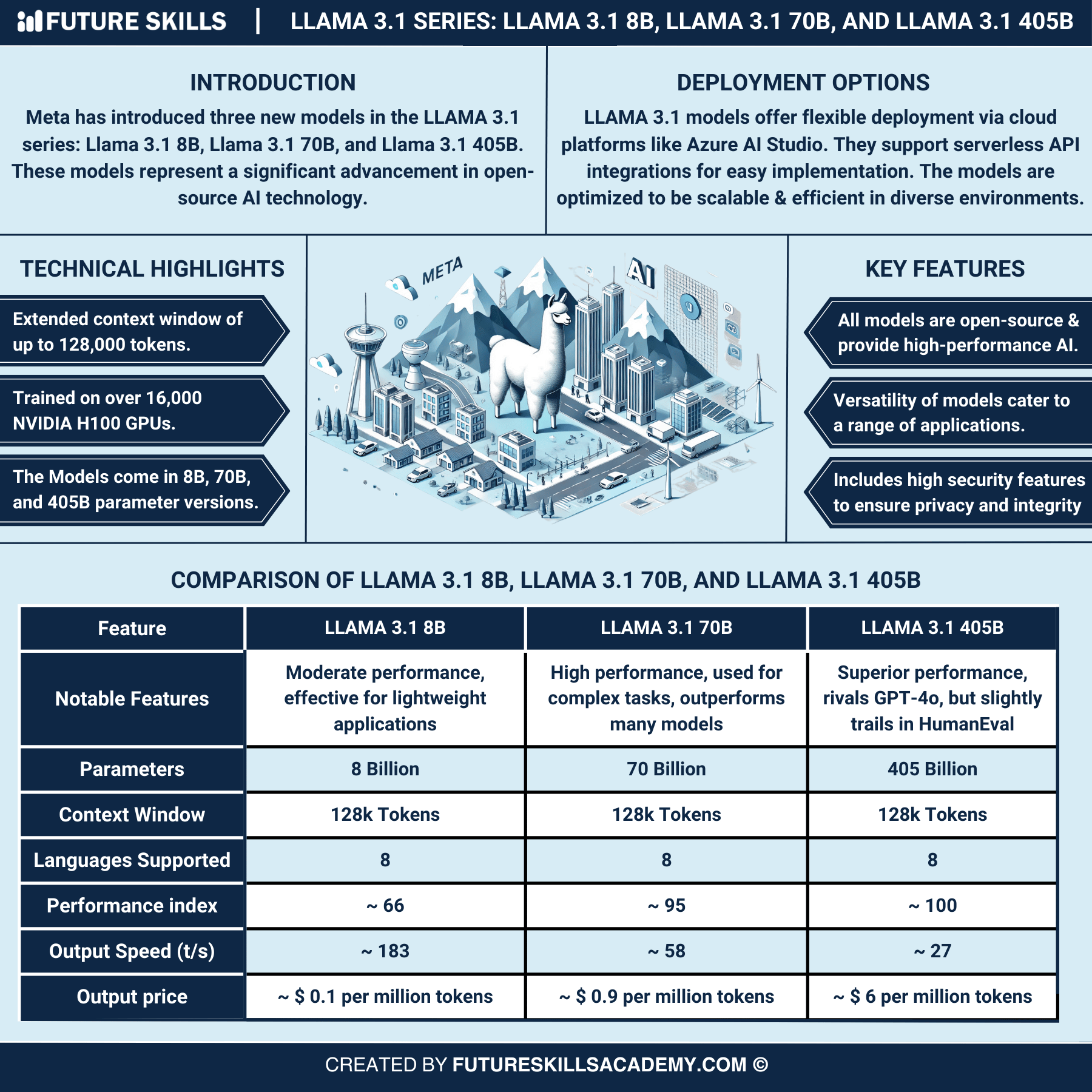

One of the first concerns that emerges in discussions about Llama 3.1 models is the flexibility of deployment. The notion of deploying open-source LLMs might seem appealing on paper albeit with some complexities in practical implementation. Any Llama 3.1 guide would help you understand that you would have to deal with three different types of models. Interestingly, the Llama 3.1 models guarantee flexible deployment through cloud platforms such as Azure AI Studio.

Llama 3.1 models also support serverless API integrations, thereby ensuring easier implementation. It is also important to note that the optimization of Llama 3.1 models ensures efficient and scalable deployments in a wide range of environments. The flexibility of deploying Llama 3.1 models proves how Meta has fulfilled an important parameter of accessibility. Easier deployment options would encourage more users to tap into the potential of AI through Llama 3.1 models.

Unraveling the Technical Highlights of Llama 3.1 Models

Meta launched the Llama 3.1 open-source family of models with a versatile model, a capable model and a powerful model. You might have some doubts regarding the uniqueness of the new Llama AI models and how they are better than the predecessors. Meta created Llama 3.1 to take a giant leap forward in the AI revolution and compete against top models such as GPT-4o and Claude 3.5 Sonnet. Contrary to some assumptions about Llama 3.1 being a minor update to the Llama 3 model, Meta has made some significant enhancements in the technical design of Llama 3.1 models.

Let us deep dive into the technical highlights, key features, and comparison of Llama 3.1 models.

-

Longer Context Window

One of the foremost technical highlights of the Llama 3.1 models is the extended context window. Llama 3.1 models can accommodate 128k tokens in the context window that would help them support longer inputs. The extended context window also enables the models to maintain the context in interactions over documents or extended conversations.

-

Powerful Training

The most distinctive technical highlight of Llama 3.1 models is the sophistication of their training process. Llama 3.1 405B is the largest model by Meta and achieving training at such a large scale was a challenge. The developers optimized the complete training stack alongside bringing in more than 16,000 NVIDIA H100 GPUs to train the Llama 3.1 models at a large scale.

-

Versatile Models

The Llama 3.1 AI models are different from each other in many ways, including the number of parameters used in their development. Llama 3.1 405B is probably the first open-source AI model that can outperform top models in multilingual translation, mathematics, general knowledge, and usability. The 8B and 70B models also have stronger reasoning capabilities that make them useful for advanced use cases.

Identifying the Key Features of Llama 3.1 Models

The next crucial aspect you must know about the Llama 3.1 models is an outline of their key features. An overview of the different Llama 3.1 models can help you determine how each model in the family offers higher performance than models of similar size. The Llama 3.1 models serve as a formidable milestone in the AI revolution as they welcome open-source AI.

The versatility of the Llama 3.1 models in terms of their capabilities enables their usage in different types of applications. Llama 3.1 405B can help with synthetic data generation, model distillation and refining smaller models. On the other hand, the 70B and 8B models can be used to create coding assistants and multilingual conversational chatbots.

Another prominent feature of the Llama 3.1 models is the assurance of high security and safeguarding the integrity and privacy of users. The Llama Stack and other safety tools help in protecting users against security threats. In addition, the developers also follow risk discovery exercises before deployment, such as comprehensive red teaming for stress testing models.

Learn about the fundamentals of Llama 3 with our comprehensive guide and get in-depth understanding of Llama models.

Demystifying the Architecture of Llama 3.1 Models

Learning about the architecture of Llama 3.1 models can help you understand how they work. One of the crucial highlights in guides to learn Llama 3.1 is the decoder-only transformer architecture. The architecture is used in most of the modern LLMs for enhanced efficiency in working on complex language understanding and processing.

Llama 3.1 models use transformers to understand and generate natural text with ease. The decoder-only transformer architecture empowers Llama 3.1 models with many crucial advantages. You can also use the Mixture of Experts architecture in Llama 3.1 models albeit with the risk of complexities that may influence model stability.

Start your AI journey with our best AI for Everyone Free Course and familiarize yourself with the fundamentals of AI for free.

Discovering the Differences between the Llama 3.1 Models

You must be curious about the comparison between the Llama 3.1 models, especially considering the impressive set of features they bring to the table. Before finding the answers to ‘How to use Llama 3.1 model?’ you must look for the ideal model suited to your needs. A general comparison of the Llama 3.1 models reveals that they have certain common features.

The models support eight languages, including English, French, Spanish, Hindi, Thai, German, Portuguese and Italian. All the models support the 128k token context window. The following sections will help you understand the models in the Llama 3.1 family and the differences between them.

-

Llama 3.1 405B

The biggest model in the family in terms of parameters at 405 billion, the Llama 3.1 405B has been created for superior performance. It has the capabilities to compete against GPT-4o with better results on all benchmarks albeit with the exception of HumanEval. Llama 3.1 405B also scores a 100 on the performance index, which is the highest in the family. The largest model falls behind the other two models in terms of output speed, owing to the number of parameters and specialization of tasks.

Llama 3.1 405B uses its strengths, an advanced architecture, and the massive parameter count, to perform well in complex text generation and understanding tasks. It has shown the potential to outperform many other models and can establish new standards for language models. Researchers and developers can use Llama 3.1 405B as a valuable tool to create diverse applications.

-

Llama 3.1 8B

The Llama 3.1 8B might be the smallest in the family with 8 billion parameters. It has been designed for moderate performance and works best for lightweight applications. The Llama 3.1 8B stands out in any Llama 3.1 guide as the fastest model with an output speed of 183 tokens per second. Llama 3.1 8B has also showcased impressive capability and efficiency, even with a smaller parameter count.

The priorities of Llama 3.1 8B model revolve around reducing resource consumption and increasing speed. It can be the best pick for scenarios that involve deployment in environments with limited resources, such as edge devices. Llama 3.1 8B is a strong addition to the family for its capability to outperform similar-sized models.

-

Llama 3.1 70B

The Llama 3.1 70B is the most versatile addition in the Llama 3.1 model family. It can offer the right blend of efficiency and performance with 70 billion parameters helping it work on different types of applications. The model maintains a competitive advantage over open-source and closed models of same size in different benchmarks. On top of it, the smaller size enables easier deployment and management on standard hardware.

Llama 3.1 70B offers the assurance of better performance than larger models like GPT-3.5 Turbo. It has shown better results in complex reasoning datasets like the ARC Challenge dataset. Llama 3.1 70B has also stepped up with superior performance on coding datasets like HumanEval. The performance of Llama 3.1 70B showcases its robustness and versatility, thereby making it useful for diverse applications.

Expand your knowledge on how to prompt code Llama which can assist you in your journey to learn more about Llama.

Final Thoughts

The introduction to Llama 3.1 AI models showed how Meta has come up with the most powerful family of open-source AI models. The flexible deployment options for the Llama 3.1 models enhance accessibility while serving different levels of performance. Llama 3.1 models have different parameter count according to their design that has been tailored for specific requirements.

Every model in the family makes the most of an extended context window that can accommodate 128K tokens. It makes the Llama 3.1 model family more interactive and capable of maintaining context in longer conversations or documents. Learn more about large language models to understand how Llama 3.1 is important for AI right now.