Generative AI is a type of AI that creates new content based on patterns learned from its training data. Much of the growing interest in learning about generative AI comes from its ability to produce audio, text, video, and images that resemble human-made content. Generative AI caught the attention of the whole world with the arrival of ChatGPT in late 2022.

According to a report published by McKinsey in June 2023, generative AI could add between $6.1 trillion and $7.9 trillion to the global economy by improving worker productivity. Generative AI also introduces potential business risks. Despite its capability to enhance productivity, it can lead to privacy violations, exposure of intellectual property, and inaccurate responses.

Generative AI also raises ethical concerns. For example, the productivity gains it brings could displace many people from their existing jobs. As a result, workers may need to learn how generative AI works and retrain for new responsibilities.

Meanwhile, governments, policymakers, and technology executives around the world have been calling for AI regulations to be implemented faster. As generative AI gains popularity, it is increasingly important to understand its purpose and how it works. In this post, we will explore what generative AI is and how it works.

Definition of Generative AI

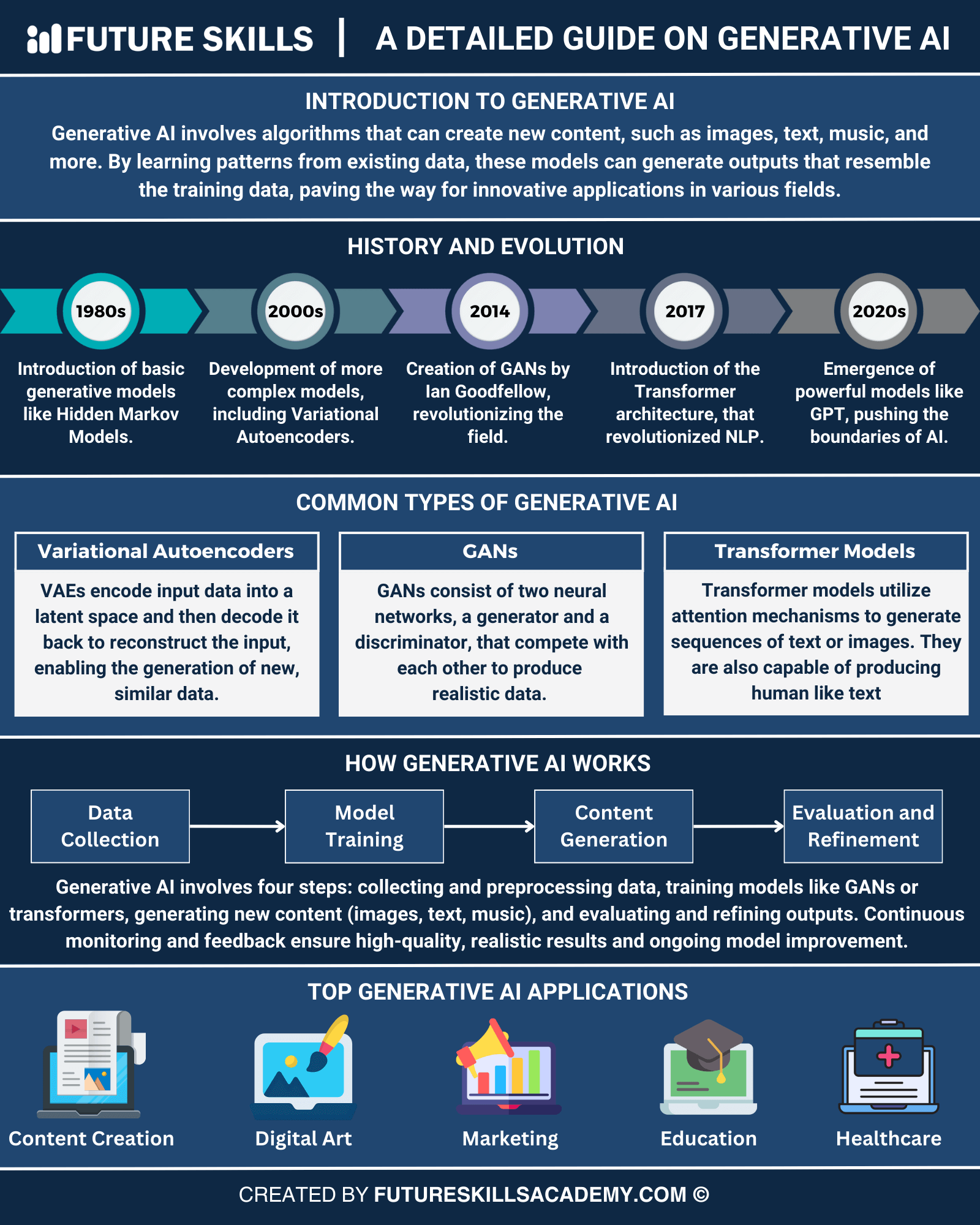

The first thing you need in a generative AI tutorial is a definition. Generative AI is a subdomain of machine learning whose models generate new content as output in response to text prompts. These prompts can range from short, simple queries to long, complex questions.

Different types of generative AI tools can generate new images, videos, and audio content. However, text-centric conversational AI tools have gained the most momentum. Generative models trained on text let people hold direct conversations with them and learn from them, much like interacting with another person.

Generative AI caught the attention of the whole world within a few months of the introduction of ChatGPT, which was based on OpenAI's GPT-3.5 neural network model. Many conversational chatbots arrived earlier, including ELIZA, created at the Massachusetts Institute of Technology in the mid-1960s. However, most of these earlier AI systems were rule-based: they lacked contextual understanding and offered limited responses drawn from a set of predefined templates and rules.

Generative AI learns from existing data to create new, realistic artifacts. The outputs of generative AI applications reflect the traits of their training data. Well-known examples such as ChatGPT, DALL-E, and Gemini generate different types of new content.

For example, you can create new videos, music, images, text, product designs, and software code with generative AI. The techniques behind generative AI are under continuous development. The most prominent examples are foundation models trained on broad collections of unlabeled data.

Working Mechanism of Generative AI

Generative AI is a vital part of the modern technological landscape, and understanding how it works helps you make the most of its potential. There are two complementary ways to look at its working mechanism. On one hand, neural networks are loosely inspired by the human brain, and models are trained iteratively to improve their performance. On the other hand, there is still no complete explanation of why a large generative model produces a particular output.

The core element of generative AI algorithms is deep learning, or deep neural networks, an important subdomain of machine learning. For text, the process begins by feeding large amounts of data to a large language model (LLM). The pre-training data can include books, company information, or web pages, depending on the type of content you want to generate. You can understand the working mechanism of generative AI in more depth by looking at how LLMs use transformers. A transformer-based model converts sentences and other data sequences into numerical representations called vector embeddings.
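A minimal sketch of the first step, turning text into vectors, might look like the following. Several assumptions apply. The whitespace tokenizer stands in for the subword tokenizers that real LLMs use. The embedding values are random placeholders, whereas a real model learns them during training. `build_vocab`, `make_embedding_table`, and `embed` are hypothetical helper names.

```python
import random

def build_vocab(sentences):
    """Assign an integer ID to every distinct lowercase word (a toy whitespace tokenizer)."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def make_embedding_table(vocab_size, dim, seed=0):
    """Create one random vector per token ID; a real model learns these values in training."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def embed(sentence, vocab, table):
    """Map a sentence to vectors: word -> token ID -> row of the embedding table."""
    ids = [vocab[word] for word in sentence.lower().split()]
    return ids, [table[i] for i in ids]

corpus = ["the cat sat on the mat", "the dog sat on the rug"]
vocab = build_vocab(corpus)
table = make_embedding_table(len(vocab), dim=4)
ids, vectors = embed("the cat sat", vocab, table)
print(ids)           # [0, 1, 2]
print(len(vectors))  # 3, one vector per word
```

In a transformer, these per-token vectors are only the starting point. The model's layers then refine them into context-dependent representations.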

Once the input data has been converted into vectors, a generative AI model can classify and organize it according to how similar each vector is to the others in the vector space. This helps determine the relationships between words. The quality of this vectorization strongly influences how well a model can produce output that aligns with its training data. To generate sensible results, the vectors then pass through many further layers of computation.
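One common way to measure "similarity between vectors" is cosine similarity. The sketch below uses it to find the closest word. The three-dimensional vectors are invented for illustration and are not taken from any real model. `most_similar` is a hypothetical helper.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-picked vectors, invented for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def most_similar(word, embeddings):
    """Return the other word whose vector points in the most similar direction."""
    query = embeddings[word]
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(query, embeddings[w]))

print(most_similar("cat", embeddings))  # dog
```

In a trained model, words used in similar contexts tend to end up with similar vectors. That property is what makes comparisons like this meaningful.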

Put simply, generative AI uses neural networks to identify patterns and structures in existing data. To understand how it works, you also need to understand the different learning approaches these models use.
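The basic "learn patterns, then generate new content that reflects the training data" loop can be seen even without a neural network. The sketch below deliberately swaps in a much simpler technique, a word-level bigram model. It records which words follow which, then samples new sequences from those observations. This is not how modern generative AI is built. The training sentence and the helper names `train_bigrams` and `generate` are hypothetical.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record, for each word, every word that followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, length, seed=0):
    """Build a new sequence by repeatedly sampling an observed follower of the last word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # dead end: this word never had a follower in training
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

training_text = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(training_text)
print(generate(model, "the", length=6))
```

The output can be a sentence that never appeared in the training text, yet every word transition in it was learned from that text. Neural generative models capture far richer patterns, but the principle is similar.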

Generative AI models are trained using semi-supervised and unsupervised learning. These approaches let organizations use massive volumes of unlabeled data to build foundation models, which can then be further trained to accomplish multiple tasks. Examples of foundation models include GPT-3, a language model, and Stable Diffusion, an image-generation model. ChatGPT, for example, was built on GPT-3.5, a successor to GPT-3.
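Unlabeled text can be turned into training examples through next-word prediction, an approach often called self-supervised learning. Each stretch of text becomes an input, and the word that follows it becomes the label, so no human annotation is needed. The sketch below assumes whole words and a fixed context of three words. Real language models use subword tokens and much longer contexts. `next_word_pairs` is a hypothetical helper.

```python
def next_word_pairs(text, context_size):
    """Slice raw, unlabeled text into (context, next word) training examples.

    The 'label' for each example is simply the word that follows the context.
    """
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs

for context, target in next_word_pairs("generative models learn patterns from data", 3):
    print(context, "->", target)
# ('generative', 'models', 'learn') -> patterns
# ('models', 'learn', 'patterns') -> from
# ('learn', 'patterns', 'from') -> data
```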

How Can You Identify Successful Generative AI Models?

Another important topic in any discussion of generative AI is what makes a generative AI model successful. A comprehensive generative AI tutorial would be incomplete without outlining these traits. You can identify a successful generative AI model by the following characteristics:

Diversity

An effective generative AI model should capture the minority modes in its data distribution without compromising generation quality. This helps reduce unwanted biases in the trained model.

Quality

Quality is another key criterion when reviewing generative AI models. Applications that interact directly with users need high-quality output. For example, in speech generation, poor audio quality makes the output hard to understand. In image generation, the output should be visually indistinguishable from natural images.

Speed

Most interactive applications of generative AI need outputs to be generated quickly. For example, real-time image editing, which can be a valuable addition to content creation workflows, depends on fast generation.

Types of Generative AI Models

Generative AI includes a broad collection of applications built on many kinds of neural networks. Most generative AI systems fit the description in the ‘How does generative AI work’ section, albeit with different implementation techniques suited to different types of content. Neural network models consist of repeated layers of artificial neurons and the weighted connections between them. The discovery of new architectures has been driving many of the recent advances in AI.
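As an illustration of "layers of artificial neurons and the connections between them", here is a minimal sketch of a forward pass through a tiny fully connected network. The weights are made-up numbers, and the choice of tanh as the activation is an assumption. `dense_layer` and `forward` are hypothetical helpers, not a library API.

```python
import math

def dense_layer(inputs, weights, biases):
    """One layer of artificial neurons.

    Each neuron takes a weighted sum of all inputs (its connections),
    adds a bias, and applies a non-linear activation.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(math.tanh(total))  # tanh is one common activation choice
    return outputs

def forward(inputs, layers):
    """Stack layers by feeding each layer's outputs into the next layer."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical weights for a 2-input -> 3-neuron -> 1-neuron network.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
print(forward([1.0, 2.0], layers))
```

Training adjusts these weights and biases. Different architectures, such as the ones below, differ mainly in how neurons are connected and how their weights are shared.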

Two of the earliest neural network architectures used for generative AI are recurrent neural networks and convolutional neural networks. The following sections describe what each offers to generative AI.

Recurrent Neural Networks

Recurrent Neural Networks, or RNNs, arrived in the mid-1980s and are still useful in generative AI. RNNs paved the way for many generative AI tools by demonstrating that AI can learn and automate tasks involving sequential data, meaning data whose order carries meaning, such as stock market behavior or language.

RNNs have powered many audio AI models that generate new music, and they are also effective in natural language processing. RNNs remain useful for traditional AI tasks, such as speech recognition, financial prediction, and handwriting analysis.
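What makes an RNN suited to sequential data is its hidden state, which is carried from one step to the next. Below is a minimal sketch of a simple (Elman-style) RNN forward pass. The weights are hypothetical, and training is omitted. `matvec` and `rnn_forward` are illustrative helper names.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def rnn_forward(sequence, W_x, W_h, b):
    """Run a simple RNN over a sequence of input vectors.

    The hidden state h carries information from earlier steps:
        h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b)
    """
    h = [0.0] * len(b)  # the hidden state starts at zero
    states = []
    for x_t in sequence:
        pre = [a + c + bias for a, c, bias in zip(matvec(W_x, x_t), matvec(W_h, h), b)]
        h = [math.tanh(v) for v in pre]
        states.append(h)
    return states

# Hypothetical weights: 1-dimensional inputs, 2-dimensional hidden state.
W_x = [[0.5], [-0.3]]
W_h = [[0.1, 0.2], [0.0, 0.4]]
b = [0.0, 0.0]
states = rnn_forward([[1.0], [0.0], [0.0]], W_x, W_h, b)
print(states[-1])  # still non-zero: the first input is "remembered"
```

Because each step depends on the previous hidden state, feeding the same inputs in a different order produces a different result. That dependence is also why RNNs must process a sequence one step at a time.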

Convolutional Neural Networks

Convolutional Neural Networks, or CNNs, are one of the most established deep learning architectures. CNNs operate on grid-like data and deliver the best results with spatial data such as images. CNNs can also generate pictures, and popular text-to-image apps such as DALL-E and Midjourney are reported to use CNN-based components in their image-generation pipelines.
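The core CNN operation slides a small kernel of weights across a grid and reuses the same weights at every position. The sketch below shows a single 2-D convolution, assuming "valid" padding and stride 1. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation. The kernel is hand-written here, whereas a CNN would learn its kernels during training. `conv2d` is a hypothetical helper.

```python
def conv2d(image, kernel):
    """Slide a small kernel over a 2-D grid ('valid' padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel at position (i, j).
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        output.append(row)
    return output

# A 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-written vertical-edge detector.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The response is strongest exactly where the edge is. Image generators stack many such learned filters, plus other layers, to build up pictures rather than only detect features.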

Transformer Models

RNNs were once the preferred choice for generative models of sequences. However, efforts to improve on RNNs led to the introduction of transformer models, which have emerged as a powerful and flexible alternative for representing sequences.

Anyone who wants to learn generative AI should pay close attention to transformer models. Their distinctive trait is that they process sequential data, including text, in parallel rather than one step at a time. This parallelism makes it practical to train very large models, such as those behind ChatGPT, and helps them process prompts quickly.
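The mechanism that lets transformers handle a sequence in parallel is self-attention. Below is a minimal sketch of scaled dot-product attention in pure Python. For simplicity it reuses the same toy vectors as queries, keys, and values, whereas real transformers derive each from learned projections. It also omits the causal mask that GPT-style models add during generation. `softmax` and `self_attention` are illustrative helpers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Each position's output depends only on the inputs, not on other outputs,
    so all positions could be computed at the same time (real models do this
    with batched matrix multiplications on GPUs).
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs

# Toy 2-dimensional vectors for a 3-token sequence.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(x, x, x))
```

Unlike the RNN loop, no iteration here waits on the result of a previous one. This independence is what allows the computation to be parallelized.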

Furthermore, open-source efforts, academic research, and private-sector investment have produced several influential model families built on these neural network architectures. Some of the most popular include the following.

Variational Autoencoders

Variational Autoencoders, or VAEs, combine innovations in both training processes and neural network architecture. They are commonly used in image-generation applications. A VAE has two distinct networks. An encoder compresses input data into a probability distribution over a lower-dimensional latent space, and a decoder reconstructs data from samples drawn from that space.
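Two pieces are specific to the VAE training procedure, and both can be shown without the encoder and decoder networks themselves. The first is the "reparameterization trick" used to sample a latent vector. The second is the KL-divergence term that keeps the latent space well organized. The sketch assumes the encoder outputs a mean `mu` and a log-variance `log_var` per latent dimension, which is the usual Gaussian setup. The numbers and helper names are hypothetical.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu
    and log_var, so the encoder can be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over latent dimensions.

    Adding this to the reconstruction loss pulls the encoder's distributions
    toward N(0, 1), so sampling N(0, 1) at generation time gives latents the
    decoder can turn into realistic outputs.
    """
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for one input, with a 2-dimensional latent space.
mu, log_var = [0.5, -1.0], [0.0, -0.5]
z = reparameterize(mu, log_var, random.Random(42))
print(z, kl_to_standard_normal(mu, log_var))
```

The full VAE loss is the reconstruction error of the decoder's output plus this KL term.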

Generative Adversarial Networks

Generative Adversarial Networks, or GANs, are used across different modalities but are especially popular for image- and video-related tasks. GANs differ from other models in that two neural networks compete against each other during training. For example, a GAN used for image generation has a generator that creates images and a discriminator that checks whether each image is real or generated.
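The competition between the two networks is expressed through their loss functions. The sketch below shows the standard binary cross-entropy loss for the discriminator. For the generator it uses the common "non-saturating" loss, one widely used variant and not the only option. The inputs are the discriminator's output probabilities, and the networks themselves are omitted.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: the discriminator's probability that a real image is real.
    d_fake: its probability that a generated image is real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled (d_fake near 1)."""
    return -math.log(d_fake)

# A discriminator that cannot tell real from fake outputs 0.5 for both.
print(discriminator_loss(0.5, 0.5))  # about 1.386, i.e. 2 * ln 2
print(generator_loss(0.5))           # about 0.693, i.e. ln 2
```

Training alternates between the two. The discriminator is updated to lower its loss, then the generator is updated to lower its own, which pushes the discriminator's score on fakes back up.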

Diffusion Models

Diffusion models are the next important family in a generative AI tutorial. They combine multiple neural networks with different architectures in one framework. During training, a diffusion model (often after compressing the data) gradually adds noise to it and learns to remove that noise in order to regenerate the original data. The most popular example, Stable Diffusion, uses a VAE encoder and decoder to move images into and out of a compressed latent space, along with a CNN-based U-Net that removes the noise step by step.
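The noising half of a diffusion model is a fixed formula with no neural network involved. The learned part is the denoiser. The sketch below implements the closed-form forward noising step used in DDPM-style models, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. It assumes a linear noise schedule of 1,000 steps from 1e-4 to 0.02, a common choice but not necessarily what any specific product uses. The tiny three-number `x0` stands in for an image or latent, and the helper names are hypothetical.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that increase linearly (an assumed, common schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much of the original signal survives at step t."""
    result, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        result.append(running)
    return result

def add_noise(x0, t, a_bars, rng):
    """Jump straight to noising step t:
        x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * noise
    Returns the noisy data and the noise; a denoising network would be
    trained to predict that noise from x_t and t.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    scale_signal = math.sqrt(a_bars[t])
    scale_noise = math.sqrt(1.0 - a_bars[t])
    x_t = [scale_signal * x + scale_noise * n for x, n in zip(x0, noise)]
    return x_t, noise

betas = linear_beta_schedule(1000)
a_bars = alpha_bars(betas)
x0 = [0.8, -0.4, 0.1]  # stand-in for a (tiny) image or latent
rng = random.Random(0)
print(add_noise(x0, 0, a_bars, rng)[0])    # almost identical to x0
print(add_noise(x0, 999, a_bars, rng)[0])  # almost pure noise
```

Generation runs the process in reverse. Starting from pure noise, the trained denoiser removes a little noise at each step until a clean sample remains.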

What are the Use Cases of Generative AI?

Generative AI can fully automate or speed up a diverse collection of tasks. Businesses must plan innovative and specific approaches to maximize the benefits they gain from it. Here are the most notable use cases of generative AI.

- Generative AI tools can use simple, chat-based interfaces to answer general or work-specific questions.

- Generative AI tools can also review any type of text, identify mistakes, and suggest improvements.

- Generative AI can also help businesses and users improve communication, for example by drafting or summarizing messages.

- Generative AI can also serve as a helpful tool for reducing the burden of administrative work by automating complex tasks.

- Software developers and engineers can also use generative AI models to troubleshoot and refine code faster and more reliably.

Does Generative AI Have Any Limitations?

Yes, generative AI is not free of setbacks. Its notable limitations include the need for human oversight and expensive computational power. In addition, businesses may need to develop their own specialized models rather than rely on public generative AI tools. Most importantly, the use of generative AI can meet resistance from both employees and customers. Addressing these limitations is therefore important for broader adoption.

Final Words

This discussion of generative AI and its working mechanisms shows that it is loosely inspired by the human brain. The simplest explanation of ‘how does generative AI work’ is that generative AI models train on massive datasets to identify patterns and structures. They then use what they have learned to generate new content that reflects their training data. Generative AI boosts productivity and enhances workflows across different business operations, but it also has limitations. Learn more about generative AI and find the best ways to put it into practice right away.