Generative AI has become one of the most visible trends driving the growing adoption of artificial intelligence. The release and evolution of new large language models (LLMs) have expanded the possibilities for using AI in different applications. Have you ever wondered how to get LLMs to understand and respond to your queries more effectively? A large part of the answer lies in prompt engineering techniques, which help LLMs understand instructions better and deliver the desired outputs.

Prompt engineering is the art and science of guiding language models to generate the expected results. However, prompt engineering is not about writing arbitrary instructions that you hope the LLM will understand. It requires understanding the capabilities of LLMs and writing prompts that communicate your goals effectively. Let us take a closer look at the most important prompt engineering techniques and their advantages.

What are Prompt Engineering Techniques?

Prompt engineering involves creating meaningful instructions for generative AI models so that they generate better responses. In practice, a prompt engineering technique is a systematic way of choosing specific words, adding relevant context, and structuring instructions so that the model understands what you want.

Large language models can generate large amounts of text, some of which may be fabricated or biased. Prompt engineering techniques help guide LLMs toward accurate and relevant responses. They also help you take advantage of a broad range of LLM capabilities. For example, you can combine several prompting techniques to generate news articles in a specific tone and style.

Most Popular Prompt Engineering Techniques You Should Try

Prompt engineering helps you design and refine prompts to achieve better results across different LLM tasks. To move beyond basic prompts for simple tasks, you need more advanced techniques. Here are some of the most notable prompt engineering techniques for guiding LLMs through complex tasks.

-

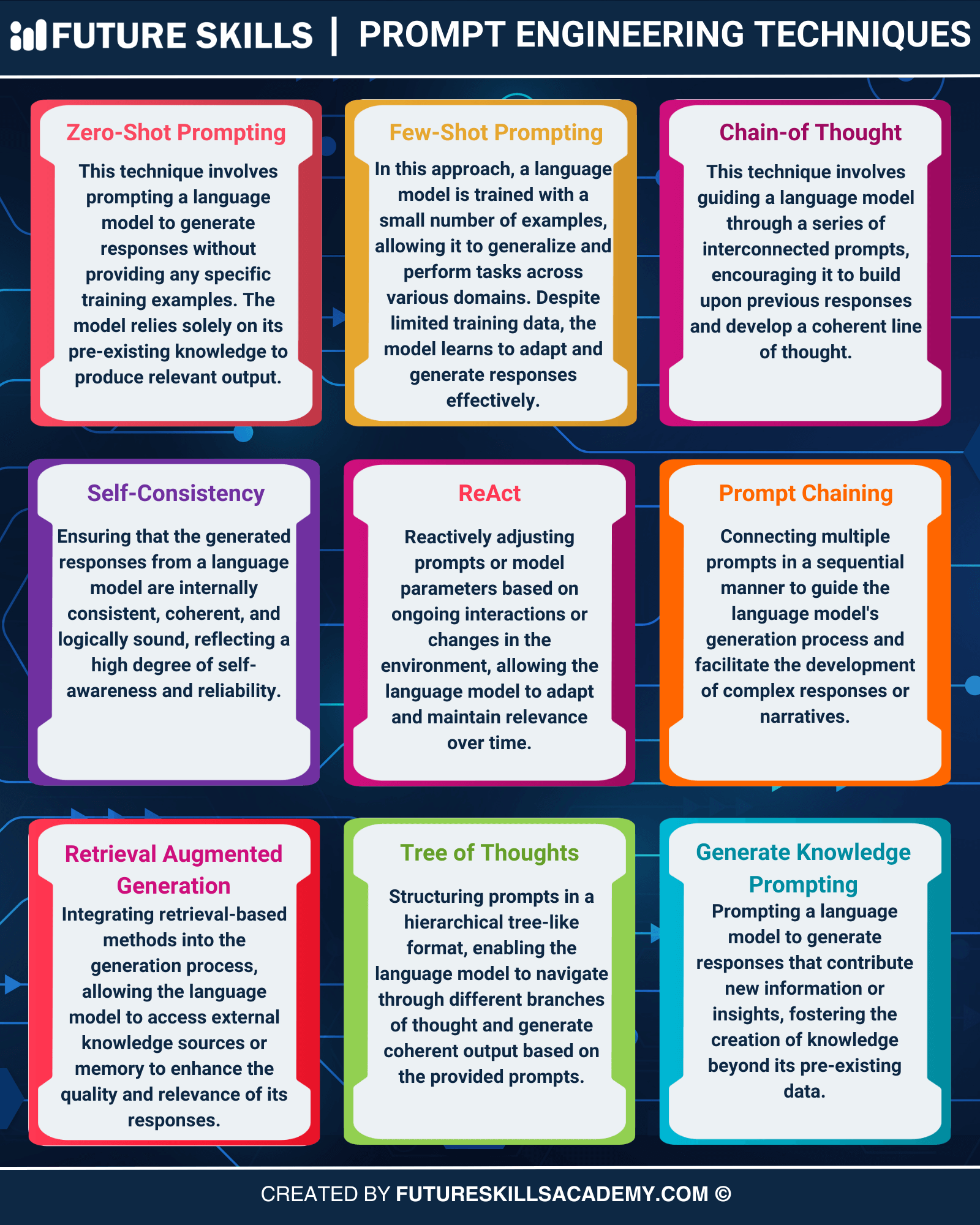

Zero-Shot Prompting

Before you explore the advanced prompt engineering techniques, you should understand zero-shot prompting. It is the most fundamental prompting technique and does not include any examples in the prompt. With zero-shot prompting, the model relies solely on what it learned during training to generate outputs.

Large language models such as GPT-4 have been trained on massive datasets and tuned to follow instructions. This large-scale training enables them to perform many tasks through a zero-shot approach, in which the prompt describes the task without providing any examples. Instruction tuning, which fine-tunes models on datasets of tasks described through instructions, has proved effective in improving zero-shot learning.

Reinforcement Learning from Human Feedback (RLHF) has also been adopted to scale instruction tuning by aligning the model more closely with human preferences. Zero-shot prompting is not the best technique for complex tasks, but this kind of tuning is what makes it work well for many everyday ones. In fact, models such as ChatGPT were trained with these techniques, which helps them adapt to new tasks.
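To make the idea concrete, here is a minimal Python sketch of a zero-shot prompt: it contains only an instruction and the input, with no examples. The helper `build_zero_shot_prompt` and the sentiment-classification task are illustrative assumptions, and sending the string to an actual model depends on whichever LLM API you use, so that step is left out.

```python
def build_zero_shot_prompt(instruction: str, text: str) -> str:
    """Return a zero-shot prompt: an instruction plus the input, with no demonstrations."""
    return f"{instruction}\nText: {text}\nSentiment:"


prompt = build_zero_shot_prompt(
    "Classify the text into neutral, negative or positive.",
    "I think the vacation is okay.",
)
print(prompt)
```

The model has to infer the expected label format from the instruction alone, which is where zero-shot prompting tends to break down on harder tasks.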

-

Few-Shot Prompting

Few-shot prompting is useful when zero-shot prompting falls short. LLMs show strong zero-shot capabilities, but they can still fail on more complex tasks in the zero-shot setting. In such cases, you can use few-shot prompting to enable in-context learning.

The technique involves providing demonstrations or examples in the prompt to steer the model toward better performance. Few-shot prompting is one of the top prompt engineering techniques because the examples in the prompt condition the model to generate the desired responses. Notably, few-shot capabilities first appeared once models were scaled to a sufficient size.

It also helps to follow a few recommendations when shaping the examples for few-shot prompting. The format of the demonstrations plays a key role: even examples with random labels can work much better than no labels at all, as long as the format is consistent. The label space and the distribution of the input text shown in the demonstrations also matter. However, few-shot prompting has limitations, particularly for complex reasoning tasks.
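A minimal sketch of a few-shot prompt in Python, assuming a simple `input // label` layout (the helper name and the demonstrations are illustrative). Every demonstration uses the same format, and the final line leaves the label blank for the model to complete.

```python
from typing import List, Tuple


def build_few_shot_prompt(demos: List[Tuple[str, str]], query: str) -> str:
    """Format demonstrations and the new input in one consistent "input // label" layout."""
    lines = [f"{text} // {label}" for text, label in demos]
    # The query uses the same format but leaves the label for the model to fill in
    lines.append(f"{query} //")
    return "\n".join(lines)


demos = [
    ("This is awesome!", "Positive"),
    ("This is bad!", "Negative"),
    ("Wow that movie was rad!", "Positive"),
]
prompt = build_few_shot_prompt(demos, "What a horrible show!")
print(prompt)
```

Trying different demonstrations only changes the `demos` list, which makes it easy to experiment with the label space and input distribution.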

-

Chain of Thought Prompting

Chain of Thought (CoT) prompting is the next important technique. If you are looking for a prompt engineering technique for complex reasoning tasks, Chain of Thought prompting is a natural choice. It enables complex reasoning by having the model work through intermediate reasoning steps before giving its final answer.

You can combine Chain of Thought prompting with few-shot prompting to get better results on complex tasks that require reasoning before responding. Two notable variants of Chain of Thought prompting are zero-shot Chain of Thought prompting and automatic Chain of Thought prompting.
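As an illustration, here is a minimal sketch of few-shot Chain of Thought prompting in Python. The exemplar spells out its intermediate reasoning before the final answer, nudging the model to reason the same way on the new question. The exemplar is the widely used tennis-ball arithmetic example; the helper `few_shot_cot_prompt` is hypothetical.

```python
from typing import Sequence

# One worked demonstration: the answer includes the intermediate reasoning steps
EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def few_shot_cot_prompt(question: str, exemplars: Sequence[str] = (EXEMPLAR,)) -> str:
    """Prepend reasoning demonstrations to a new question, leaving its answer open."""
    return "\n\n".join([*exemplars, f"Q: {question}\nA:"])


prompt = few_shot_cot_prompt(
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?"
)
print(prompt)
```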

Zero-shot Chain of Thought prompting adds an instruction such as "Let's think step by step" to the original prompt, without providing any demonstrations. Automatic Chain of Thought (Auto-CoT) prompting removes the manual effort of writing demonstrations by generating reasoning chains automatically. It involves two distinct steps: question clustering and demonstration sampling. Question clustering partitions the questions of a given dataset into a few clusters. Demonstration sampling then selects a representative question from each cluster and generates its reasoning chain using zero-shot Chain of Thought.
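The sketch below illustrates both variants in Python under heavy simplifying assumptions. `zero_shot_cot_prompt` appends the "Let's think step by step." trigger. For Auto-CoT, a toy bag-of-words similarity and a single-pass assignment to k seed questions stand in for the sentence embeddings and k-means clustering used in practice, and `stub_llm` is a hypothetical placeholder for the real model that would write each reasoning chain.

```python
import math
from collections import Counter
from typing import Callable, List

COT_TRIGGER = "Let's think step by step."


def zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: append a step-by-step trigger to the original question."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def embed(text: str) -> Counter:
    # Toy bag-of-words vector (assumption: stands in for a sentence encoder)
    return Counter(text.lower().replace("?", "").replace(",", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster_questions(questions: List[str], k: int) -> List[List[str]]:
    """Stage 1, simplified: assign each question to the most similar of k seed questions."""
    seeds = [embed(q) for q in questions[:k]]
    clusters: List[List[str]] = [[] for _ in seeds]
    for q in questions:
        vec = embed(q)
        best = max(range(len(seeds)), key=lambda i: cosine(vec, seeds[i]))
        clusters[best].append(q)
    return clusters


def build_auto_cot_demos(questions: List[str], k: int, llm: Callable[[str], str]) -> List[str]:
    """Stage 2: pick a representative per cluster and let the model write its reasoning chain."""
    demos = []
    for cluster in cluster_questions(questions, k):
        if not cluster:
            continue
        # Representative: the question most similar, in total, to the rest of its cluster
        rep = max(cluster, key=lambda q: sum(cosine(embed(q), embed(o)) for o in cluster if o != q))
        prompt = zero_shot_cot_prompt(rep)
        demos.append(f"{prompt} {llm(prompt)}")
    return demos


def auto_cot_prompt(demos: List[str], question: str) -> str:
    """Final prompt: automatically built demonstrations followed by the new question."""
    return "\n\n".join(demos + [zero_shot_cot_prompt(question)])


def stub_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real model call
    return "(reasoning chain generated by the model)"


QUESTIONS = [
    "Tom has 5 apples and eats 2. How many apples are left?",
    "What is the capital of France?",
    "Sara has 3 apples and buys 4 more. How many apples does she have?",
    "What is the capital of Japan?",
]
demos = build_auto_cot_demos(QUESTIONS, k=2, llm=stub_llm)
print(auto_cot_prompt(demos, "Ali has 7 pens and gives away 3. How many pens are left?"))
```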

-

Self-Consistency

Another advanced prompt engineering technique is self-consistency. It aims to replace the naïve greedy decoding used in Chain of Thought prompting. Self-consistency samples multiple, diverse reasoning paths through few-shot Chain of Thought prompting and then uses these generations to select the most consistent answer, for example by majority vote. It helps boost the performance of Chain of Thought prompting on tasks involving arithmetic and commonsense reasoning.
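The voting step of self-consistency can be sketched in a few lines of Python. The sketch assumes you have already sampled several Chain of Thought completions for the same question (for example, by calling a model repeatedly with a non-zero temperature) and that each completion ends with "The answer is X."; the hard-coded completions below are illustrative, not real model outputs.

```python
import re
from collections import Counter
from typing import List, Optional


def extract_answer(completion: str) -> Optional[str]:
    # Assumes each sampled reasoning path ends with "The answer is <number>."
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


def self_consistent_answer(completions: List[str]) -> Optional[str]:
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    # Majority vote: the answer reached by the most reasoning paths wins
    return Counter(answers).most_common(1)[0][0]


samples = [
    "When I was 6 my sister was 3. Now I am 70, so she is 67. The answer is 67.",
    "My sister was 3 years younger than me. 70 - 3 = 67. The answer is 67.",
    "Half of 70 is 35. The answer is 35.",
]
print(self_consistent_answer(samples))  # 67
```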

-

ReAct

The list of top prompt engineering techniques also includes ReAct, a framework in which LLMs generate both reasoning traces and task-specific actions in an interleaved manner. Reasoning traces help the model induce, track, and update action plans, and handle exceptions. Task-specific actions, on the other hand, let the model interface with and gather information from external sources such as environments or knowledge bases.

The ReAct framework lets LLMs interact with external tools to retrieve additional information, which leads to more factual and reliable responses. ReAct has also been reported to outperform several state-of-the-art baselines on language and decision-making tasks.
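A minimal ReAct-style loop in Python might look like the sketch below. The Thought / Action / Observation format follows the ReAct pattern, but everything else is an assumption: `scripted_llm` is a hypothetical stand-in that returns canned steps instead of calling a model, and `search` looks up a tiny in-memory dictionary rather than a real knowledge base or API.

```python
import re
from typing import Callable, Dict

# Hypothetical toy knowledge base standing in for an external tool
KNOWLEDGE_BASE: Dict[str, str] = {
    "Eiffel Tower": "The Eiffel Tower is a wrought-iron tower in Paris, France.",
}

ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def search(query: str) -> str:
    """Task-specific action: look up information in the external source."""
    return KNOWLEDGE_BASE.get(query, "No results found.")


def scripted_llm(transcript: str) -> str:
    """Hypothetical model stand-in that emits one reasoning trace and one action per call."""
    if "Observation:" not in transcript:
        return "Thought: I need to find where the Eiffel Tower is.\nAction: Search[Eiffel Tower]"
    return "Thought: The observation says it is in Paris.\nAction: Finish[Paris]"


def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # reasoning trace plus the next action
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # the model did not produce a parsable action
        action, argument = match.groups()
        if action == "Finish":
            return argument
        if action == "Search":
            # Feed the tool result back so the next reasoning step can use it
            transcript += f"Observation: {search(argument)}\n"
    return "No answer found."


print(react("Where is the Eiffel Tower?", scripted_llm))  # Paris
```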

-

Prompt Chaining

Prompt chaining is a prompt engineering technique that you can use in many scenarios involving several operations or transformations. A common use case for LLMs is answering questions about the information in a large text document. For this use case, prompt chaining involves designing two different prompts. The first prompt extracts the quotes from the document that are relevant to the question. The second prompt takes the extracted quotes and the original document as inputs and answers the question.

As this example shows, prompt chaining breaks a task into subtasks. Once the subtasks are identified, the LLM is prompted with one subtask, and its response is used as input for the next prompt. Prompt chaining is useful for complex tasks that an LLM might struggle to handle in a single, highly detailed prompt.
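The two-prompt document question-answering chain described above can be sketched in Python as follows. The prompt wording, the function names, and `stub_llm` (a hypothetical placeholder that records prompts and returns canned text) are illustrative assumptions; the key point is that the output of the first call is passed into the second prompt.

```python
from typing import Callable, List


def extract_quotes_prompt(document: str, question: str) -> str:
    # Prompt 1: pull out only the quotes relevant to the question
    return (
        "Extract the quotes from the document that are relevant to the question. "
        "List one quote per line.\n"
        f"Question: {question}\n"
        f"Document:\n{document}"
    )


def answer_prompt(document: str, quotes: str, question: str) -> str:
    # Prompt 2: answer using the extracted quotes plus the original document
    return (
        "Using the relevant quotes and the document, answer the question.\n"
        f"Relevant quotes:\n{quotes}\n"
        f"Document:\n{document}\n"
        f"Question: {question}"
    )


def chained_answer(document: str, question: str, llm: Callable[[str], str]) -> str:
    quotes = llm(extract_quotes_prompt(document, question))  # subtask 1
    return llm(answer_prompt(document, quotes, question))  # subtask 2 consumes subtask 1's output


# Hypothetical stub model that records prompts so the chain can be inspected
sent_prompts: List[str] = []


def stub_llm(prompt: str) -> str:
    sent_prompts.append(prompt)
    if prompt.startswith("Extract"):
        return '"Prompt chaining breaks a task into subtasks."'
    return "It splits a task into smaller prompts whose outputs feed the next prompt."


doc = "Prompt chaining breaks a task into subtasks. Each response feeds the next prompt."
print(chained_answer(doc, "What does prompt chaining do?", stub_llm))
```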

-

Retrieval Augmented Generation

Retrieval Augmented Generation, or RAG, is a useful prompting technique for knowledge-intensive tasks. It combines an information retrieval component with a text generator model. RAG can be fine-tuned, and its knowledge can be updated efficiently without retraining the entire model.

RAG takes an input and retrieves a set of relevant supporting documents from a given source, such as Wikipedia. The retrieved documents are concatenated with the original input prompt as context, and the combined prompt is fed to the text generator, which produces the final output. Because the source documents can be updated without retraining, RAG is useful in applications where facts may change over time.
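Here is a minimal sketch of the retrieve-then-concatenate flow in Python. Word overlap stands in for a real retriever (production systems typically use BM25 or dense vector search), and the tiny corpus is made up for illustration. The final call to a text generator is omitted; the function only builds the augmented prompt.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    # Toy retriever (assumption): rank documents by word overlap with the query
    query_terms = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(query_terms & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, corpus: List[str], k: int = 2) -> str:
    # Concatenate the retrieved documents as context with the original input
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\nAnswer:"
    )


corpus = [
    "The Eiffel Tower is in Paris.",
    "Python was created by Guido van Rossum.",
    "Paris is the capital of France.",
]
query = "Where is the Eiffel Tower?"
prompt = build_rag_prompt(query, corpus)
print(prompt)
```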

-

Tree of Thoughts

Traditional prompting techniques might not work for complex tasks that require exploration or strategic lookahead. Tree of Thoughts (ToT) prompting is one of the advanced prompt engineering techniques that can help with such tasks. It is a framework that generalizes Chain of Thought prompting and encourages the exploration of thoughts that serve as intermediate steps for general problem solving with language models.

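The Tree of Thoughts technique maintains a tree of thoughts, where each thought is a coherent language sequence that serves as an intermediate step toward solving a problem. This lets the language model self-evaluate its progress through these intermediate thoughts, and combining that self-evaluation with search algorithms such as breadth-first or depth-first search allows it to explore thoughts systematically, with lookahead and backtracking.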
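The search side of Tree of Thoughts can be illustrated with a small breadth-first search that keeps only the best few partial solutions at each level. This is a toy sketch under clear assumptions: the problem (pick three steps from 1 to 4 that add up to a target) is made up, and `propose` and `evaluate` are simple hypothetical functions standing in for the LLM calls that would generate and score thoughts in a real ToT setup.

```python
from typing import List

State = List[int]  # a partial solution: the "thoughts" chosen so far


def propose(state: State) -> List[State]:
    # Stand-in for an LLM thought generator: extend the partial solution by one step
    return [state + [step] for step in (1, 2, 3, 4)]


def evaluate(state: State, target: int) -> float:
    # Stand-in for an LLM state evaluator: prune overshoots, prefer totals closer to target
    total = sum(state)
    return float("-inf") if total > target else -(target - total)


def tree_of_thoughts_bfs(target: int, depth: int, beam_width: int) -> State:
    frontier: List[State] = [[]]
    for _ in range(depth):
        # Expand every kept state, then keep only the most promising candidates
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]
    return frontier[0]


print(tree_of_thoughts_bfs(target=10, depth=3, beam_width=2))  # [4, 4, 2]
```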

-

Generated Knowledge Prompting

Generated knowledge prompting is another useful prompt engineering technique. As LLMs continue to improve, one popular approach is to incorporate knowledge or information that helps the model make more accurate predictions. Generated knowledge prompting uses the model itself to generate that knowledge before it makes a prediction.

LLMs can struggle with tasks that require more knowledge about the world. Generated knowledge prompting addresses this in two steps. First, the model is prompted to generate knowledge statements relevant to the question. Then, that knowledge is integrated into the prompt so the model can make a more reliable prediction. In simple terms, the model is asked to surface relevant information before answering, rather than having that information retrieved from an external knowledge base.
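The two steps can be sketched in Python as below. The prompt templates, the golf question, and `stub_llm` (a hypothetical placeholder returning canned text) are illustrative assumptions. For brevity, the sketch concatenates all generated knowledge into one answer prompt; the original method instead answers once per knowledge statement and keeps the most confident prediction.

```python
from typing import Callable, List


def knowledge_prompt(question: str) -> str:
    """Step 1 prompt: ask the model to state relevant knowledge before answering."""
    return f"Generate some knowledge about the concepts in the input.\nInput: {question}\nKnowledge:"


def answer_prompt(question: str, knowledge: List[str]) -> str:
    """Step 2 prompt: integrate the generated knowledge into the question."""
    facts = "\n".join(f"- {fact}" for fact in knowledge)
    return f"Question: {question}\nKnowledge:\n{facts}\nExplain and answer:"


def generated_knowledge_answer(question: str, llm: Callable[[str], str], num_samples: int = 2) -> str:
    # Step 1: sample several knowledge statements (a real model would vary them)
    knowledge = [llm(knowledge_prompt(question)) for _ in range(num_samples)]
    # Step 2: answer with the generated knowledge included in the prompt
    return llm(answer_prompt(question, knowledge))


def stub_llm(prompt: str) -> str:
    # Hypothetical placeholder for a real model call
    if prompt.startswith("Generate some knowledge"):
        return "The objective of golf is to play a set of holes in the least number of strokes."
    return "No. In golf, the player with the fewest strokes wins."


question = "Part of golf is trying to get a higher point total than others. Yes or No?"
print(generated_knowledge_answer(question, stub_llm))
```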

Final Words

This review of popular prompt engineering techniques shows that each technique has distinct advantages. For example, zero-shot prompting works out of the box with large language models. On the other hand, advanced techniques such as Chain of Thought prompting, Generated Knowledge prompting, and Tree of Thoughts prompting are useful for complex tasks. Explore these techniques to find the ones that best fit your LLM applications.