Generative AI tools such as ChatGPT and Google Gemini have become the main forces behind the AI revolution. They have made artificial intelligence part of people’s everyday lives and business operations, helping with tasks such as writing assignments, creating automated workflows, and preparing business presentations. However, getting the results you want from these models depends on prompt engineering.

Prompt engineering is a valuable skill that can help you land a job as an AI professional. At the same time, questions such as “How to perfect prompt engineering?” can leave beginners unsure where to start. Skilled prompt engineers are valuable assets in the growing AI landscape, and the role offers attractive rewards. Let us look at some important tips and tricks for prompt engineering.

Why Should You Learn Prompt Engineering Tricks?

Prompt engineering matters because it shapes the usefulness and performance of language models. The quality of a generative AI tool’s input determines the accuracy and contextual relevance of its responses, so good prompts are the key to harnessing these tools’ potential. People skilled in prompt engineering best practices can solve problems, uncover new insights, and come up with new ideas.

Prompt engineering can improve the accuracy of AI answers and strengthen a model’s ability to handle complex tasks. It also improves the user experience of AI systems. Most important of all, prompt engineering skills let you contribute to innovation in the field of AI.

What are the Most Popular Prompt Engineering Tips?

You can gain an edge in the AI landscape by applying a few proven prompt engineering tips. Keep in mind that prompt engineering evolves continuously as new advancements arrive, so practitioners must follow sound best practices to get consistently good results from AI models. Here are some of the most valuable tips and tricks to strengthen your prompt engineering expertise.

Comprehensive Analysis

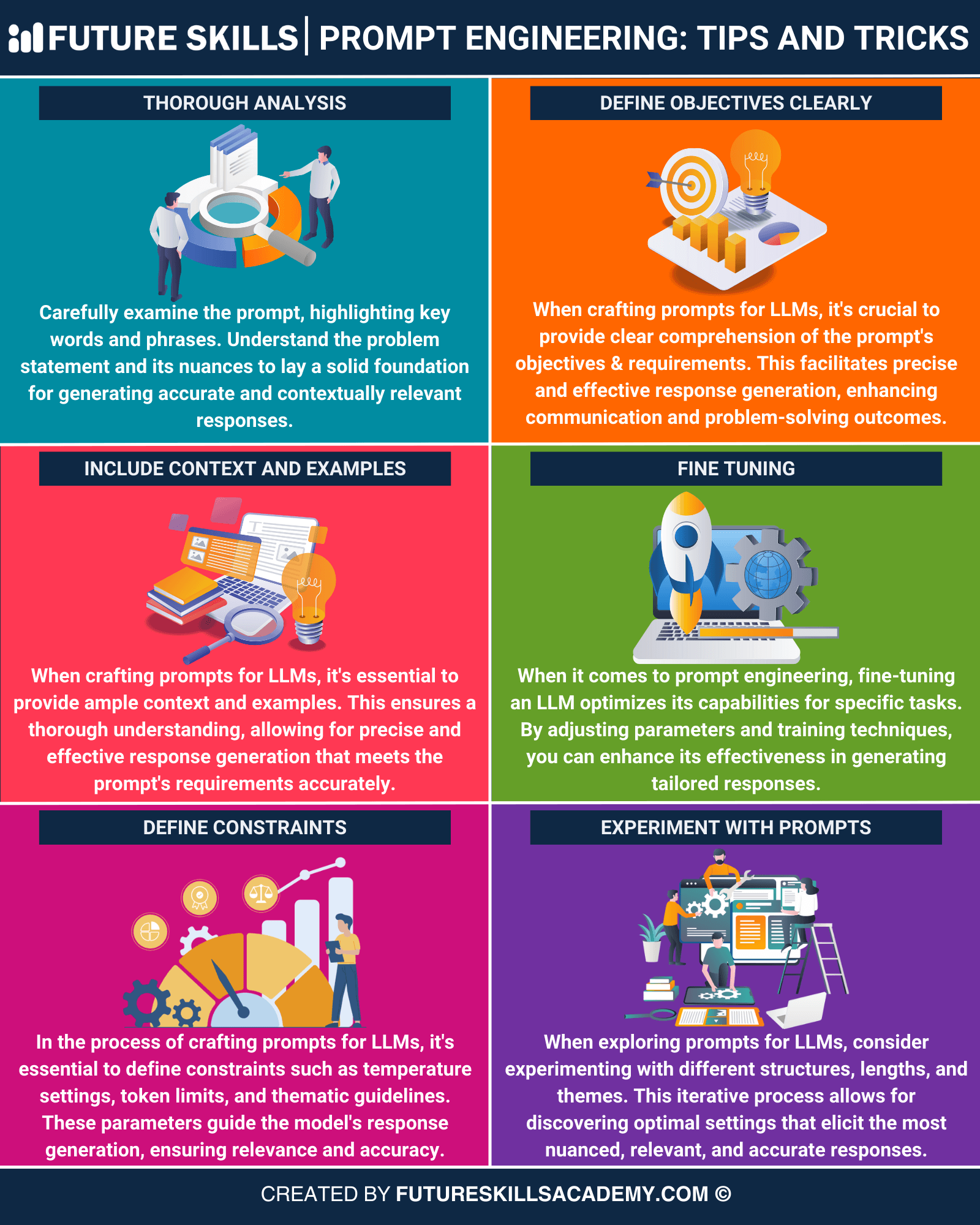

Creating effective prompts starts with comprehensive analysis. Before beginning a prompt engineering project, you need a thorough understanding of the problem statement and its crucial nuances. You must know exactly what problem the prompt is meant to solve before you can arrive at the ideal solution.

Understanding the problem helps you identify the nuances that form a solid foundation for your prompts. Your research then feeds into an effective problem statement that includes the relevant keywords and phrases. Comprehensive analysis also means examining the prompts carefully to ensure that they produce accurate and contextually relevant responses.

Clear Definition of Objectives

Creating effective prompts for LLMs is challenging. Often you have to experiment with a wide range of prompt combinations without knowing in advance which one will produce the desired results. However, prompt engineering best practices suggest that you can counter this uncertainty with specificity.

Define a clear set of requirements and objectives for the prompt so that you know what kind of output to expect. Clear objectives lead to more accurate and effective responses, and specific objectives also improve problem-solving results and communication.

Ambiguity in a prompt can reduce the accuracy and relevance of the information the AI returns. Specific prompts help the model understand the nuance and context of the request, which keeps it from generating irrelevant or generic responses. You can define clear objectives by including the relevant details without overloading the model with unnecessary information.

Striking the right balance between relevant details and unnecessary information reduces the risk of misinterpretation. Tailoring your prompts to the desired outcome also helps you navigate the uncertainty around prompt engineering goals. Answers to “How to perfect prompt engineering?” typically point to the elements that make those goals specific: detailed context, output format, output length, and the tone and style you want in the output.
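One way to make those objectives explicit is to assemble the prompt from named fields, so none of them can be silently left out. The sketch below is a minimal illustration under that assumption; `PromptSpec`, `build_prompt`, and the sample values are hypothetical, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the objectives a prompt should state."""
    task: str
    context: str
    output_format: str
    max_words: int
    tone: str
    style: str


def build_prompt(spec: PromptSpec) -> str:
    # One line per objective, so the model never has to guess any of them.
    return "\n".join([
        f"Task: {spec.task}",
        f"Context: {spec.context}",
        f"Output format: {spec.output_format}",
        f"Length: no more than {spec.max_words} words",
        f"Tone: {spec.tone}",
        f"Style: {spec.style}",
    ])


spec = PromptSpec(
    task="Summarize the attached quarterly sales report.",
    context="The readers are regional managers who have not seen the report.",
    output_format="A bulleted list of key findings",
    max_words=150,
    tone="Neutral and professional",
    style="Plain language without jargon",
)
print(build_prompt(spec))
```

Keeping each objective on its own labeled line also makes it easy to change one element, such as the length or tone, while holding the rest constant.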

Leveraging the Ideal Context and Examples

Providing the right context and examples in a prompt guides the LLM toward the desired output. Without context, an LLM is more likely to produce generic, biased, or hallucinated responses. For example, if you ask ‘What is the ideal roadmap to success?’, the LLM will generate general advice about achieving success.

On the other hand, a prompt like ‘What is the ideal roadmap to success as a prompt engineer?’ would produce responses about the steps you must follow to become a successful prompt engineer. No list of prompt engineering best practices is complete without stressing the importance of detailed context. Context serves as background information that helps the LLM understand the scenario for which you need a response.

The context of a prompt can include its subject matter and scope along with other relevant constraints. Adding specific, relevant data to a prompt can significantly improve the quality of AI-generated responses. For example, data that includes numerical values, categories, and dates is useful for tasks involving decision-making and detailed analysis.

Beyond supplying the data itself, citing its source improves the clarity and credibility of the task. Remember that well-structured, up-to-date information makes the context of your prompt richer.
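As an illustration of supplying data together with its source, the sketch below renders a few records as a small table inside the prompt and names where they came from. The records, the source label, and `build_context_prompt` are all hypothetical; the point is only the layout of context, source, and question.

```python
from datetime import date

# Hypothetical sample data, for illustration only.
records = [
    {"region": "North", "category": "Hardware", "revenue": 120_000, "day": date(2024, 3, 31)},
    {"region": "South", "category": "Software", "revenue": 95_500, "day": date(2024, 3, 31)},
]
SOURCE = "Internal Q1 sales ledger (hypothetical)"


def build_context_prompt(question: str, rows: list[dict], source: str) -> str:
    # Render the data as a small table so numbers, categories, and dates stay unambiguous.
    lines = [
        f"{r['region']} | {r['category']} | {r['revenue']:,} USD | {r['day'].isoformat()}"
        for r in rows
    ]
    return "\n".join([
        "Answer using only the data below.",
        f"Source: {source}",
        "Region | Category | Revenue | Date",
        *lines,
        "",
        f"Question: {question}",
    ])


print(build_context_prompt("Which region had higher revenue?", records, SOURCE))
```

Formatting dates in ISO form and numbers with explicit units removes guesswork about what each value means.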

Another common prompt engineering tip is to use examples. Examples set a clear expectation for the type of information or response you want. They are most useful for complex tasks, or for creative tasks that may have more than one valid answer.

It is important to remember that your examples must represent the style and quality of the desired result. Some of the common example types that you can use in prompt engineering include sample texts, code snippets, data formats, document templates, and examples of charts and graphs.
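A common way to supply examples is "few-shot" prompting: each example is written as an input followed by the ideal reply, before the real input. The sketch below assumes a chat-style API that accepts a list of `role`/`content` messages (the shape used by OpenAI’s Chat Completions API, for instance); the reviews, labels, and `build_few_shot_messages` helper are hypothetical.

```python
# Hypothetical labeled examples that show the model the exact output we expect.
EXAMPLES = [
    ("The delivery arrived two days late and the box was damaged.", "Negative"),
    ("Setup took five minutes and everything worked.", "Positive"),
]


def build_few_shot_messages(examples, new_input):
    """Return a chat-style message list: instructions, worked examples, then the new input."""
    messages = [{
        "role": "system",
        "content": "Classify each customer review as Positive, Negative, or Neutral. Reply with one word.",
    }]
    for review, label in examples:
        # Each example is a user turn followed by the ideal assistant reply.
        messages.append({"role": "user", "content": review})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": new_input})
    return messages


messages = build_few_shot_messages(
    EXAMPLES, "The manual was confusing, but support fixed my issue quickly."
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Because the example replies are single words, the model is nudged toward the same terse format for the new review.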

Fine Tuning LLMs

Fine-tuning LLMs is another trusted way to achieve better results alongside prompt engineering. Fine-tuning optimizes an LLM’s capabilities for specific tasks, and many answers to “How to perfect prompt engineering?” point to fine-tuning through parameter adjustments and new training techniques.

Fine-tuning can also make LLMs better at generating customized responses. As AI models grow more complex, it becomes necessary to understand their capabilities and limitations, and a clear grasp of an LLM’s strengths and weaknesses helps you avoid mistakes in fine-tuning.

Fine-tuning therefore involves recognizing the model’s limitations. You can direct AI models toward specific tasks only if you know how to handle setbacks such as AI hallucinations. For example, you cannot force an AI model to perform tasks it was not designed for. Notable LLM limitations include no direct access to external databases, no real-time data processing, and no access to personal data.
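Fine-tuning usually starts with a dataset of example conversations showing the behavior you want. The sketch below prepares such data as JSON Lines (one JSON object per line). The `messages` layout follows the chat format OpenAI documents for its fine-tuning API; other platforms use different schemas, so treat the field names as an assumption to check against your provider. The examples and helper names are hypothetical.

```python
import json

# Hypothetical training examples: each pairs a prompt with the reply the tuned model should learn.
TRAINING_EXAMPLES = [
    {"messages": [
        {"role": "system", "content": "You answer questions about the store's return policy."},
        {"role": "user", "content": "Can I return an opened item?"},
        {"role": "assistant", "content": "Yes, within 30 days, as long as you include the receipt."},
    ]},
    {"messages": [
        {"role": "system", "content": "You answer questions about the store's return policy."},
        {"role": "user", "content": "Do you refund shipping costs?"},
        {"role": "assistant", "content": "Shipping costs are refunded only if the item arrived damaged."},
    ]},
]

VALID_ROLES = {"system", "user", "assistant"}


def is_valid(example: dict) -> bool:
    # Basic sanity check: known roles only, and the last turn is the target assistant reply.
    roles = [m.get("role") for m in example.get("messages", [])]
    return bool(roles) and set(roles) <= VALID_ROLES and roles[-1] == "assistant"


def to_jsonl(examples: list[dict]) -> str:
    # JSON Lines: one JSON object per line, no enclosing array; invalid examples are skipped.
    return "".join(json.dumps(ex, ensure_ascii=False) + "\n" for ex in examples if is_valid(ex))


print(to_jsonl(TRAINING_EXAMPLES), end="")
```

In practice you would write this string to a `.jsonl` file and upload it to your fine-tuning platform; the validation step is a cheap way to catch malformed examples before training.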

Definition of Important Constraints

Creating effective prompts for LLMs also involves defining constraints. Prompt engineering best practices help you identify the important ones, such as temperature settings, token limits, and thematic guidelines. These constraints guide how the model generates responses, improving accuracy and contextual relevance.

As you create and test prompts, you will encounter several important settings in LLM configuration. Adjusting them can make responses more useful and reliable, and experimentation will help you find the best settings for your tasks. Apart from temperature, which controls how random the output is, important settings include max length, which caps the number of tokens generated, and the presence and frequency penalties, which discourage the model from repeating tokens it has already used.
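To see what temperature and the two penalties actually do, here is a toy sketch of one next-token step. The penalty update follows the formula OpenAI has described in its API documentation: each token’s logit is reduced by its count so far times the frequency penalty, plus the presence penalty once if it has appeared at all. Other providers may implement penalties differently, and the three-token vocabulary and logit values are hypothetical (real models score many thousands of tokens). Max length is not modeled, since it simply stops generation at the token limit.

```python
import math
from collections import Counter


def adjust_logits(logits, generated, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of every token already generated.

    frequency_penalty scales with how many times the token appeared;
    presence_penalty is a flat deduction applied once if it appeared at all.
    """
    counts = Counter(generated)
    return {
        tok: logit
        - counts[tok] * frequency_penalty
        - (1.0 if counts[tok] > 0 else 0.0) * presence_penalty
        for tok, logit in logits.items()
    }


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (temperature 0 is usually treated as greedy decoding)")
    scaled = {tok: v / temperature for tok, v in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical next-token logits and generation history.
LOGITS = {"the": 2.0, "a": 1.5, "prompt": 1.0}
HISTORY = ["the", "the", "prompt"]

adjusted = adjust_logits(LOGITS, HISTORY, frequency_penalty=0.5, presence_penalty=0.3)
# "the" drops to 0.7 and "prompt" to 0.2, so "a" (1.5) becomes the likeliest token.
for temp in (0.5, 1.0):
    probs = softmax_with_temperature(adjusted, temp)
    print(temp, {tok: round(p, 3) for tok, p in probs.items()})
```

Running it shows the two effects separately: the penalties change which token is favored, while the temperature changes how strongly it is favored.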

Experimentation with Prompts

Another trick for getting the results you want is to experiment with your prompts. Try different lengths, themes, and structures as you search for the best prompts for an LLM. Treat this as an iterative process that lets you discover the settings that produce the desired responses.

Experimentation helps you obtain more accurate and relevant responses from LLMs. Another best practice is to try different personas, asking the model to respond as, for example, a customer service executive, a product manager, a celebrity, or a parental figure.
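The iterative experimentation described above can be organized as a small grid: generate every combination of persona and output length, run each one, and score the responses. In the sketch below, `call_model` is a hypothetical stub standing in for a real LLM API call, and `score` is a hypothetical rubric (the fraction of required terms mentioned); both are assumptions you would replace with your own model and evaluation.

```python
from itertools import product

# Hypothetical prompt components to vary during experimentation.
PERSONAS = ["a customer service executive", "a product manager", "a parent"]
LENGTHS = ["in one sentence", "in three bullet points"]
TASK = "Explain why a software update has been delayed."


def build_variants(task, personas, lengths):
    # Every persona/length combination becomes one candidate prompt.
    return [f"You are {persona}. {task} Answer {length}."
            for persona, length in product(personas, lengths)]


def call_model(prompt: str) -> str:
    # Hypothetical stub: replace with a real LLM API call.
    return f"[response to: {prompt}]"


def score(response: str, required_terms: set[str]) -> float:
    # Hypothetical rubric: fraction of required terms the response mentions.
    text = response.lower()
    return sum(term in text for term in required_terms) / len(required_terms)


variants = build_variants(TASK, PERSONAS, LENGTHS)
results = [(score(call_model(v), {"update", "delay"}), v) for v in variants]
best_score, best_prompt = max(results)
print(f"{len(variants)} variants tested; best: {best_prompt!r} (score {best_score:.2f})")
```

With the echo stub every variant scores the same, so the "best" prompt here is arbitrary; the comparison only becomes meaningful once real model outputs and a task-specific rubric (or human ratings) are plugged in.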

Final Words

These tips and tricks provide practical guidance for getting the desired results from LLMs. As the AI landscape grows with the arrival of new LLMs, prompt engineering best practices will make the difference between successful and mediocre results. Effective prompts rest on comprehensive analysis, clearly defined objectives, and well-chosen context and examples. On top of that, you can improve LLM output through fine-tuning and by specifying constraints. Keep exploring prompt engineering to discover more ways to get the best results from it.