Large language models, or LLMs, have caught the attention of almost every industry and tech enthusiast in the world. You might have come across a broad range of LLM applications, ranging from AI assistants to speech recognition systems. One of the notable terms that has emerged alongside LLMs is prompt engineering, which draws attention to the significance of prompts for LLMs.

The growing emphasis on soft prompting techniques for LLMs has created curiosity about soft prompts. Soft prompts form the foundation of prompt tuning in LLMs and offer various advantages. Let us learn more about soft prompts, how they work, their applications, and the benefits they offer with prompt tuning.


Understanding the Meaning of Soft Prompts

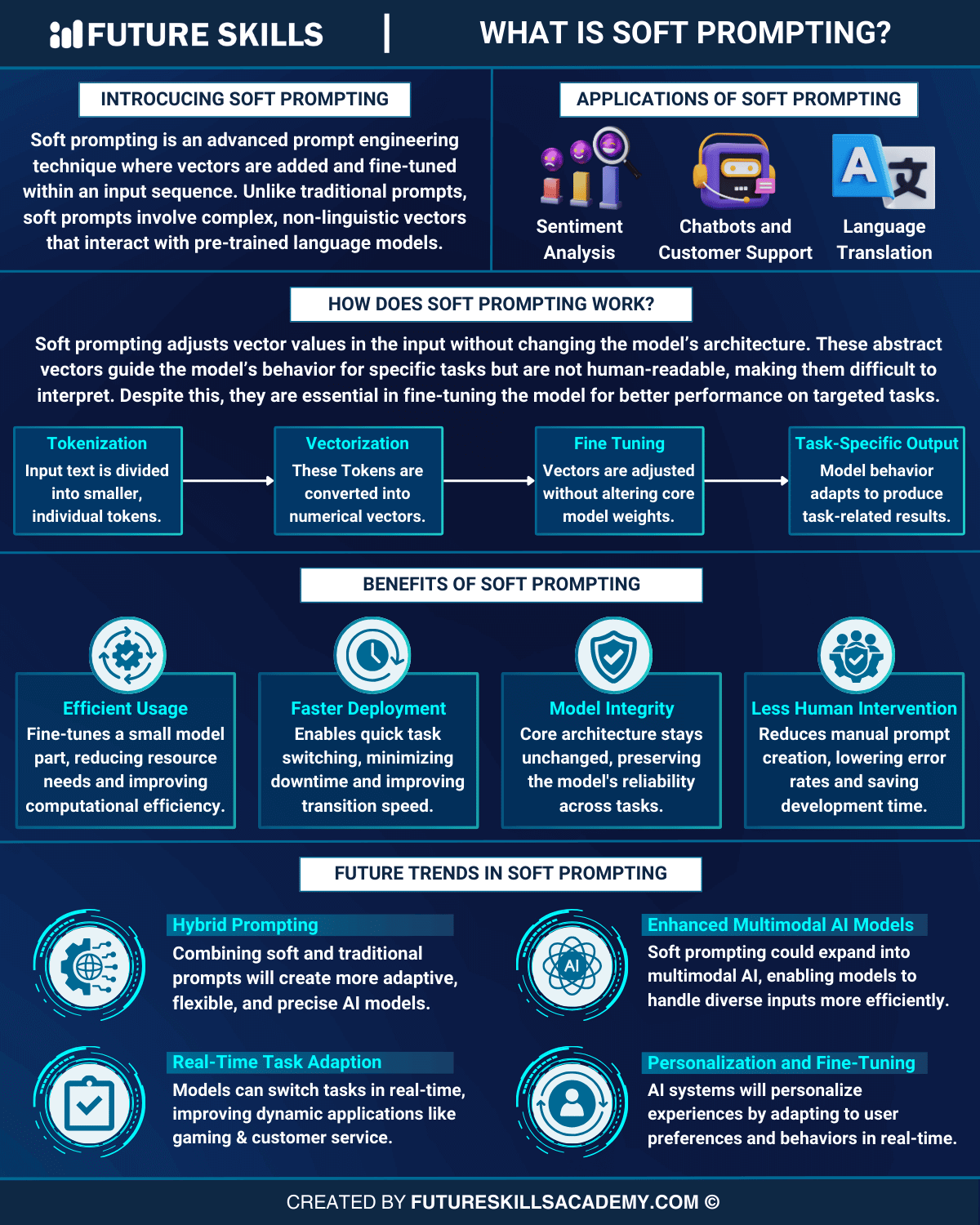

The first thing that comes to mind when you hear the word ‘prompt’ is a set of text-based instructions for generative AI models or LLMs. However, some prompts are not written in text at all. Soft prompts are vectors that are added to an input sequence and then fine-tuned for a task. During the process of soft prompting, the remaining components of the pre-trained model stay unchanged.

To create soft prompts, you adjust these vectors while the pre-trained weights of the model stay static. The new input sequence, including the fine-tuned vectors, then guides the behavior of the model for a specific task. One of the distinctive highlights of soft prompts is that they consist of abstract, seemingly random vectors which are difficult for humans to understand.

Soft prompts are significantly different from traditional prompts that offer clear instructions in human-readable language. The vectors used in soft prompts don’t have a direct semantic or linguistic connection to the concerned task. Even if the vectors are responsible for guiding the behavior of language models, the non-linguistic nature creates difficulties in understanding them.
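As a rough illustration of the idea described above, the following Python sketch prepends a few trainable vectors to an embedded input sequence. The vocabulary, the embedding width, the number of virtual tokens, and the helper `build_model_input` are all hypothetical choices made for this example and do not come from any particular library or model.

```python
import random

random.seed(0)

EMBED_DIM = 4            # hypothetical embedding width
NUM_VIRTUAL_TOKENS = 3   # hypothetical soft prompt length

# Stand-in for a frozen, pre-trained embedding table: one vector per word.
vocab = ["the", "movie", "was", "great"]
embedding_table = {
    word: [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for word in vocab
}

# The soft prompt: trainable vectors that correspond to no vocabulary word.
soft_prompt = [
    [random.gauss(0, 0.02) for _ in range(EMBED_DIM)]
    for _ in range(NUM_VIRTUAL_TOKENS)
]


def build_model_input(text, prompt_vectors):
    """Embed the text and place the soft prompt vectors in front of it."""
    token_vectors = [embedding_table[word] for word in text.split()]
    return prompt_vectors + token_vectors


model_input = build_model_input("the movie was great", soft_prompt)
print(len(model_input))  # 7: three virtual tokens plus four real tokens
```

The model would receive all seven vectors as one sequence. Only the first three have no readable counterpart in the vocabulary, which is why a soft prompt cannot be read like ordinary text.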

Identifying the Relationship between Soft Prompts and Prompt Tuning

One of the most notable uses of soft prompts in generative AI is prompt tuning. It is a technique tailored for enhancing the performance of a pre-trained language model without modifying its core architecture. Prompt tuning focuses on adjusting the prompts that guide the response of the model, while the deep structural weights of the model stay as they are.

You can notice a clear similarity between the definitions of prompt tuning and soft prompts. In prompt tuning, soft prompts are a collection of trainable parameters introduced at the beginning of the input sequence. Soft prompting gives prompt tuning the flexibility to use the same foundation model for different tasks by introducing task-specific prompts.

Explanation for Working of Soft Prompts

The best way to understand soft prompting is to learn how soft prompts work. The fundamental idea becomes clear when you examine how a model processes a prompt. The first step involves breaking down the prompt into individual tokens.

The next step in the creation of soft prompts involves converting the individual tokens into vectors of numeric values. You can think of these vectors as trainable parameters. Subsequently, you can train the vectors for a specific task by adjusting their values, while the rest of the model stays unchanged. After the values change, the vectors no longer match the meaning of any token in the vocabulary, which makes soft prompts difficult to understand.
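The steps above can be sketched with a toy example. This is a minimal sketch under stated assumptions rather than a real prompt tuning setup: the "model" is a single frozen weight vector applied to the mean of the input vectors, the embeddings and the target value are made up, and the gradient is written out by hand for this specific squared-error loss. The point it illustrates is that only the soft prompt values change during training.

```python
EMBED_DIM = 4

# Frozen pieces (hypothetical values standing in for a pre-trained model).
embedding_table = {
    "good": [0.2, 0.1, -0.4, 0.3],
    "film": [-0.1, 0.5, 0.2, 0.0],
}
frozen_weights = [0.5, -0.3, 0.8, 0.1]

# Trainable piece: two virtual tokens, initialised to zero.
soft_prompt = [[0.0] * EMBED_DIM for _ in range(2)]


def forward(prompt_vectors, tokens):
    """Toy model: dot product of frozen weights with the mean input vector."""
    sequence = prompt_vectors + [embedding_table[t] for t in tokens]
    mean = [
        sum(vec[d] for vec in sequence) / len(sequence)
        for d in range(EMBED_DIM)
    ]
    prediction = sum(w * m for w, m in zip(frozen_weights, mean))
    return prediction, len(sequence)


tokens = "good film".split()   # step 1: break the prompt into tokens
target = 1.0                   # made-up training signal for the task
learning_rate = 0.5
weights_before = list(frozen_weights)
initial_loss = (forward(soft_prompt, tokens)[0] - target) ** 2

for _ in range(200):
    prediction, seq_len = forward(soft_prompt, tokens)
    error = prediction - target
    # Gradient of (prediction - target)^2 with respect to each prompt value.
    for vec in soft_prompt:
        for d in range(EMBED_DIM):
            vec[d] -= learning_rate * 2 * error * frozen_weights[d] / seq_len

final_loss = (forward(soft_prompt, tokens)[0] - target) ** 2
print(final_loss < initial_loss)           # True
print(frozen_weights == weights_before)    # True: the model never changed
```

After training, the values in `soft_prompt` are simply whatever numbers reduced the loss. They were never tied to a word in the vocabulary, which matches the point that tuned vectors lose any human-readable meaning.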


How is a Soft Prompt Different from Regular Prompts?

Another crucial aspect in any guide to soft prompting is the difference between soft prompts and regular prompts. One of the notable differences between them is that soft prompts are not human-readable. Let us uncover some other aspects that separate soft prompts from the regular prompts used in LLMs.

- Flexibility

Regular prompts must be written with careful attention to the specific task in order to achieve the desired results. Soft prompts are learned during training rather than written by hand, so you can retrain them for different tasks. It is important to remember that you can modify soft prompts without changing the model itself.

- Working Approach

Regular prompts work by providing a specific input to the model, for which the model generates output by using its contextual understanding and pre-existing knowledge. Soft prompts work in a different way, as they require modifications only in the prompt rather than in the core knowledge of the model. In other words, soft prompting fine-tunes the prompt instead of the complete model.

- Token Length

The difference between soft prompts and regular prompts is also visible in the token length. Regular prompts can become very long, particularly in the case of complex tasks. In contrast, soft prompts use a small, fixed number of vectors instead of words, even when one model works on multiple tasks. The compact length of soft prompts supports better performance alongside easier management of tasks.

- Task Adaptability

Regular prompts are designed with specific tasks in mind, thereby implying that you need unique prompts or even different models for different tasks. Soft prompts are comparatively simpler as they can adapt to multiple tasks. The flexibility for prompt tuning with soft prompts enables you to use the same model for different tasks. Soft prompts can help you switch between tasks in a single model without disruptions.

Discovering the Applications of Soft Prompting

The most noticeable application of soft prompting revolves around ensuring easier multi-task learning. Traditional prompting methods require separate modifications for various tasks. On the other hand, soft prompts can help one model switch effortlessly between different tasks, only by changing prompts. The soft prompting method plays a crucial role in reducing the time and resources required for model training alongside keeping the existing knowledge intact.
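The task switching described above can be pictured as swapping a small set of vectors in front of the same frozen model. The sketch below assumes a toy model (a fixed weight vector applied to the mean input vector) and two made-up soft prompts, as if each had already been tuned for its task. The task names, the values, and the helper `run_frozen_model` are hypothetical.

```python
# Frozen stand-in for a pre-trained model: one shared, immutable weight vector.
FROZEN_WEIGHTS = (0.5, -0.3, 0.8, 0.1)

# One small soft prompt per task (hypothetical values, assumed already tuned).
SOFT_PROMPTS = {
    "sentiment": [[0.9, 0.0, 0.4, 0.0]],
    "summarization": [[-0.6, 0.7, -0.2, 0.0]],
}


def run_frozen_model(task, token_vectors):
    """Prepend the soft prompt for the task, then apply the shared model."""
    sequence = SOFT_PROMPTS[task] + token_vectors
    dim = len(FROZEN_WEIGHTS)
    mean = [sum(vec[d] for vec in sequence) / len(sequence) for d in range(dim)]
    return sum(w * m for w, m in zip(FROZEN_WEIGHTS, mean))


user_input = [[0.2, 0.1, -0.4, 0.3]]  # the same embedded input for both tasks

sentiment_score = run_frozen_model("sentiment", user_input)
summary_score = run_frozen_model("summarization", user_input)
print(sentiment_score != summary_score)  # True: only the prompt differed
```

Switching tasks here is a dictionary lookup. Nothing about the shared weights is retrained or reloaded, which is the property that keeps the existing knowledge of the model intact.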

You can find a wide range of applications of soft prompting in LLMs, such as sentiment analysis and language translation. Soft prompting can also improve performance in question answering and text summarization tasks. Soft prompts offer a special advantage in chatbots and conversational agents, which can make the most of the flexibility of soft prompts to customize their responses according to different styles and personalities.

Does Soft Prompting Bring Any Benefits to the Table?

The applications of soft prompting provide a glimpse of the advantages it can introduce in the functionality of LLMs. Soft prompting supports prompt tuning that can play a vital role in optimization of large language models. The following benefits of soft prompting can help you understand its significance in the domain of LLMs.

- Efficient Use of Resources

The foremost advantage of soft prompting is the efficient use of resources. Prompt tuning keeps the parameters of the pre-trained model as they are, thereby reducing the computational power requirements. This efficiency is crucial for environments with limited resources, where soft prompts can enable sophisticated model use without increasing costs. As foundation models grow in size, keeping the model parameters frozen also removes the need to deploy separate models for different tasks.

- Limited Human Intervention

Prompt tuning does not require as much human intervention as prompt engineering. You don’t have to hand-craft prompts for specific tasks, a process that can lead to errors and take up a lot of valuable time. Because soft prompts are optimized automatically during training, prompt tuning helps in enhancing efficiency and reducing human errors.

- Model Integrity

One of the interesting aspects of using soft prompts in LLMs is the assurance of model integrity. You don’t have to modify the core architecture or weights of a model with soft prompting. As a result, you can preserve the original knowledge and capabilities of the pre-trained model. This plays a vital role in maintaining the reliability of the model in different types of applications.

- Faster Deployment

Prompt tuning with soft prompts is different from comprehensive fine-tuning as prompt tuning involves modification of a small collection of soft prompt parameters. It helps in ensuring a faster adaptation process, thereby facilitating faster transitions between different tasks with significantly lower downtime.
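To make the resource argument in the list above concrete, a back-of-the-envelope calculation helps. The figures below are assumptions chosen for illustration (a model with 7 billion parameters, an embedding width of 4096, and 20 virtual tokens per task) and are not taken from any specific model. The only relationship used is that a soft prompt stores one vector of embedding width per virtual token.

```python
def soft_prompt_parameter_count(num_virtual_tokens, embed_dim):
    """A soft prompt stores one embedding-sized vector per virtual token."""
    return num_virtual_tokens * embed_dim


# Hypothetical sizes, chosen only to show the order of magnitude.
model_parameters = 7_000_000_000
embed_dim = 4096
num_virtual_tokens = 20
num_tasks = 10

prompt_parameters = soft_prompt_parameter_count(num_virtual_tokens, embed_dim)
fraction_of_model = prompt_parameters / model_parameters

# Ten tasks need ten soft prompts but still only one copy of the model.
prompt_tuning_total = model_parameters + num_tasks * prompt_parameters
full_fine_tuning_total = num_tasks * model_parameters

print(f"{prompt_parameters:,} trainable parameters per task")  # 81,920
print(fraction_of_model < 0.0001)                              # True
print(prompt_tuning_total < full_fine_tuning_total)            # True
```

Under these assumptions, each additional task adds tens of thousands of values rather than billions, which is where the savings in training cost, storage, and switching time come from.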


Final Thoughts

Soft prompting stands out as a significant development in the AI landscape. It offers a dynamic approach to adapting LLMs for different tasks, thereby expanding the scope of utility of language models. Flexibility is the advantage that most clearly separates soft prompts from regular prompts. Soft prompts let you adjust the prompt parameters without sacrificing the original capabilities and knowledge of the pre-trained model.

Soft prompting can help in creating models that could perform multiple tasks efficiently. It would reduce the need to create new models for different tasks and the extensive fine-tuning procedures to achieve optimal results. Discover more useful insights on soft prompting with credible resources now.