Artificial intelligence is more than a new technology for improving the efficiency of business operations. It has changed the way we live and work, and it has shaped new ways of interacting with technology. If you look for what drives today's AI systems, you are likely to end up with LLMs.

Large Language Models, or LLMs, are what give machines the ability to understand questions and generate answers in natural language. Any beginner's introduction to zero-shot prompting starts with why prompting matters for LLMs.

Large Language Models interact with users through prompts, which are specific cues or instructions written in natural language. A well-formed prompt directs the LLM toward contextual and coherent responses. Let us learn more about zero-shot prompting, its advantages, and how it is different from few-shot prompting.

How Do Large Language Models Work?

Interaction with a Large Language Model follows one overarching principle: the output is only as good as the input. A poorly written prompt limits the quality of the response. It therefore helps to understand how LLMs work before asking “What is zero-shot prompting?”. LLMs such as GPT generate natural language text through autoregressive prediction, which means producing one token at a time, each conditioned on the tokens before it. Training on massive datasets lets these models capture a wide range of contexts and language patterns.

Upon receiving a prompt or an instruction, the language model draws on the patterns it learned during training to predict the next token from the context. It assigns a probability to each candidate token, selects one, appends it to the text, and repeats the process.

The process continues until the model emits an end-of-sequence token or reaches a length limit, by which point it has usually produced a complete sentence or paragraph. Because every token depends on the prompt and on the text generated so far, the wording of the prompt strongly shapes the response. Therefore, you need prompt engineering to design prompts that get the best results from LLMs and generative AI systems.
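
The next-token loop described above can be sketched with a toy model. This is only an illustration: the probability table is invented, the names `NEXT_TOKEN_PROBS` and `generate` are hypothetical, and the toy looks only at the previous token, whereas a real LLM computes probabilities with a neural network conditioned on the whole context.

```python
# Toy next-token table: each token maps to candidate next tokens and their
# probabilities. The values are made up for illustration only.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"curry": 0.7, "restaurant": 0.3},
    "a": {"curry": 0.5, "restaurant": 0.5},
    "curry": {"is": 0.8, "<end>": 0.2},
    "restaurant": {"is": 0.9, "<end>": 0.1},
    "is": {"great": 0.6, "good": 0.4},
    "great": {"<end>": 1.0},
    "good": {"<end>": 1.0},
}


def generate(max_tokens=10):
    """Greedy autoregressive decoding over the toy table."""
    token = "<start>"
    output = []
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[token]
        # Pick the most probable next token (greedy choice).
        token = max(candidates, key=candidates.get)
        if token == "<end>":
            break  # Stop at the end-of-sequence marker.
        output.append(token)
    return output


print(" ".join(generate()))
```

Greedy selection is used here only to keep the sketch deterministic. Production systems often sample from the distribution instead of always taking the top token.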

Definition of Zero-shot Prompting

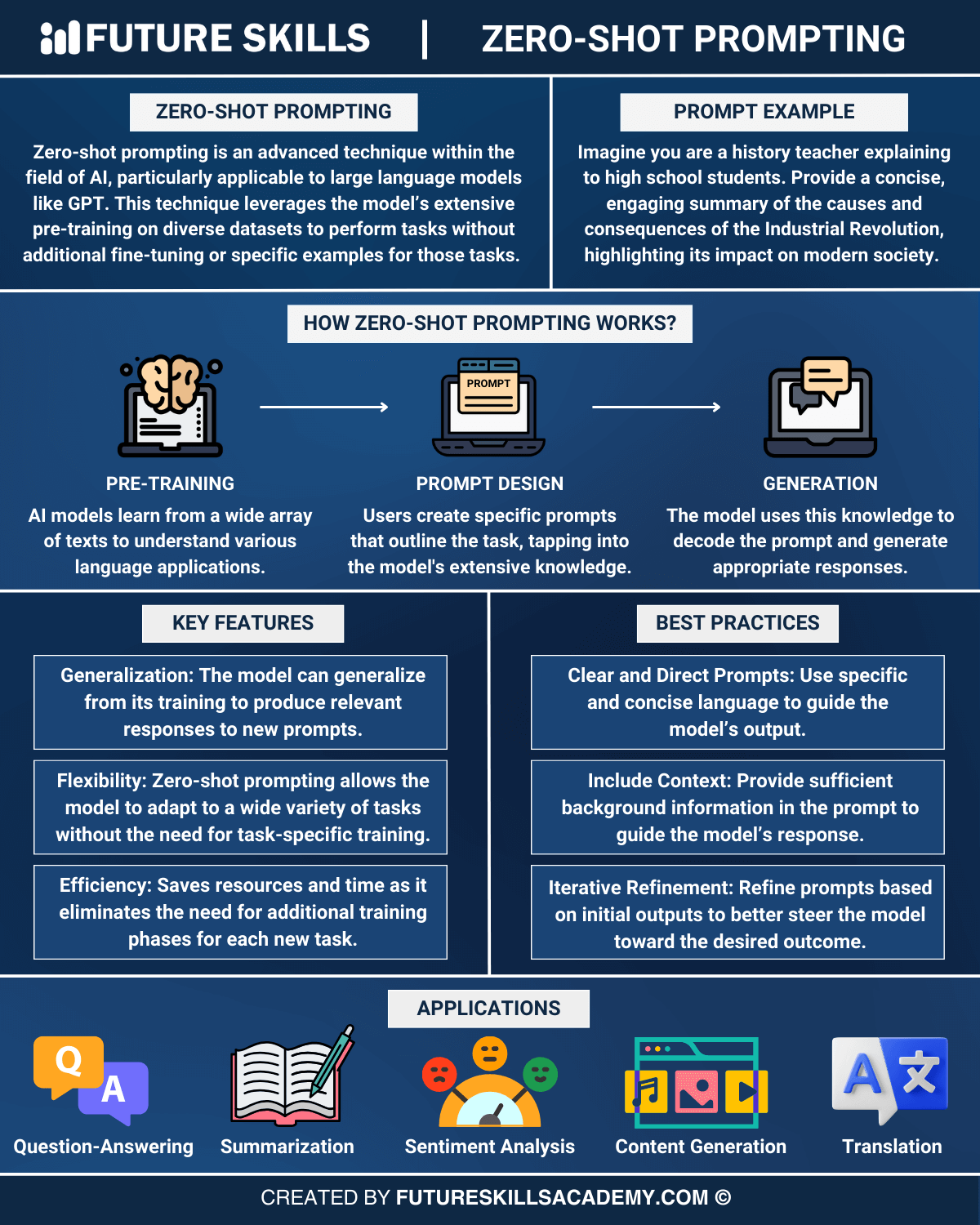

Zero-shot prompting is one of the most common prompting techniques. It asks an AI model to handle a new input or task without any task-specific training and without any examples in the prompt. Everyday use of ChatGPT shows how zero-shot prompting lets LLMs address tasks for which they have not been explicitly trained.

Zero-shot prompting involves giving the model a prompt that describes the target task, and any candidate labels, without worked examples of that task. The model then uses its pre-trained knowledge and reasoning abilities to create a relevant response to the specific prompt.

Compared to traditional machine learning methods, zero-shot prompting does not need large amounts of labeled data for a training process. Instead, it lets AI systems understand and generate responses for new tasks based on general knowledge. A simple analogy can help you develop a better understanding of its working mechanism.

Imagine being asked to do something that you have never done before. On top of that, you don’t have any step-by-step instructions or examples that you can follow to complete the task. Instead, you have to depend on what you know and your past experiences to find out how to do the task. A model answering a zero-shot prompt is in the same position.

Working of Zero-Shot Prompting

Zero-shot prompting starts when an AI model receives an instruction or a prompt that describes the task it must perform. The prompt may include some context for the task, but it contains no task-specific training data or examples. The AI model then uses its pre-existing knowledge and its understanding of natural language to generate a relevant response.
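
As a rough sketch of that structure, the helper below assembles a zero-shot prompt from an instruction, an input, and optional context. The function name `build_zero_shot_prompt` and the `Context:`/`Instruction:`/`Input:`/`Output:` field labels are assumptions chosen for illustration, not a required format.

```python
def build_zero_shot_prompt(instruction, input_text, context=None):
    """Assemble a prompt that describes the task but contains no examples."""
    parts = []
    if context:
        # Optional background information about the task.
        parts.append(f"Context: {context}")
    parts.append(f"Instruction: {instruction}")
    parts.append(f"Input: {input_text}")
    # Trailing cue that tells the model where its answer should begin.
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_zero_shot_prompt(
    instruction="Translate the input sentence into French.",
    input_text="The restaurant opens at noon.",
    context="The text comes from a restaurant website.",
)
print(prompt)
```

The resulting string would then be sent to whichever LLM you use. The model sees the task description only, so everything it needs to know about the task has to be stated in the instruction and context.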

As you learn zero-shot prompting, it is important to note that it is best suited to situations where acquiring labeled data for every task is impractical. Zero-shot prompting is a major advancement in the domain of AI because it helps LLMs generalize and adapt to new tasks without additional training or example data.

Zero-shot prompting can produce a response to a user query in two specific ways: the embedding-based approach and the generative model-based approach. Each approach has distinct traits that beginners should know. Here is a brief overview of both.

- Embedding-based Approach

Embedding-based zero-shot prompting emphasizes the semantic connections and underlying meaning shared by the prompt and the candidate outputs. The model represents each piece of text as a vector, measures the semantic similarity between the prompt and each candidate output, and returns the candidate that matches best.
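
A minimal sketch of the idea, assuming a toy bag-of-words "embedding" in place of the learned dense vectors a real system would use. The functions `embed`, `cosine`, and `zero_shot_classify`, and the label descriptions, are all hypothetical and exist only for this illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts. Real systems use learned dense vectors."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def zero_shot_classify(text, label_descriptions):
    """Return the label whose description is most similar to the text."""
    text_vec = embed(text)
    scores = {
        label: cosine(text_vec, embed(description))
        for label, description in label_descriptions.items()
    }
    return max(scores, key=scores.get), scores


labels = {
    "food": "food curry taste dish menu flavor",
    "service": "service staff waiter friendly slow wait",
}
best, scores = zero_shot_classify("the curry had amazing flavor", labels)
print(best)
```

No labeled training examples are involved: the labels are described in plain words, and the comparison happens entirely in the vector space.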

- Generative Model-based Approach

The generative model-based approach is the other way zero-shot prompting works. Here, the language model generates text conditioned on the prompt, which states the task and the expected form of the output. With the help of explicit constraints and instructions, the model generates text that is useful for the desired task. The generative model-based approach offers better precision and control over the generated output.
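
One way to picture explicit constraints is a prompt that lists the allowed answers, paired with a check on what comes back. The sketch below is an assumption-laden illustration: `fake_model` is a hypothetical stand-in that returns a fixed string instead of calling a real LLM, and `ALLOWED_LABELS`, `build_constrained_prompt`, and `parse_label` are invented names.

```python
ALLOWED_LABELS = ("positive", "negative", "neutral")


def build_constrained_prompt(review):
    """State the task and restrict the answer to a fixed set of words."""
    return (
        "Classify the sentiment of the review below.\n"
        f"Answer with exactly one word from: {', '.join(ALLOWED_LABELS)}.\n\n"
        f"Review: {review}\n"
        "Sentiment:"
    )


def parse_label(raw_output):
    """Normalize the model's text; accept it only if it is an allowed label."""
    cleaned = raw_output.strip().lower().rstrip(".")
    return cleaned if cleaned in ALLOWED_LABELS else None


def fake_model(prompt):
    # Hypothetical stand-in for a real LLM call; always returns this string.
    return " Positive.\n"


prompt = build_constrained_prompt("Best curry I have had in years.")
label = parse_label(fake_model(prompt))
print(label)
```

Validating the output matters because an instruction in the prompt is a request, not a guarantee. `parse_label` returns `None` when the model answers outside the allowed set, so the caller can retry or fall back.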

What are the Advantages of Zero-Shot Prompting?

Zero-shot prompting is one of the most powerful prompting techniques for applying generative AI models to general tasks, and it brings a broad range of benefits. Here are the most significant benefits of zero-shot prompting that you should know.

- Versatility

The range of scenarios in which people use zero-shot prompting with ChatGPT shows its versatility. Zero-shot prompting enables users to apply pre-trained language models to different tasks without the need for specialized training. That versatility enables faster experimentation and discovery across domains, which encourages innovation and the growth of new applications.

- Interpretability

Zero-shot prompts are written in natural language, so the instruction given to the model is easier to read and inspect than the task definition in a conventional machine learning pipeline. As a result, zero-shot prompting can support better trust and transparency in AI systems.

- Cost-Effectiveness

Zero-shot prompting also offers better cost-effectiveness. It does not require labeled training data for specific tasks or fine-tuning of models. Therefore, it avoids expensive data collection procedures and the computational cost of training, which lowers the barrier to using it.

- Democratization of Language Models

Lowering the barriers to entry and the dependence on specialized resources and data makes AI accessible to more people. Zero-shot prompting enables individuals, businesses, and small teams to utilize the power of LLMs for different tasks.

- Faster Deployment of AI

For professionals, another benefit is faster deployment of AI systems. With zero-shot prompting, an AI model can be put to work on a new task by writing a specific prompt rather than training a new model. This kind of agility is useful in scenarios where time is an essential resource. For example, zero-shot prompting is useful for prototyping, emerging business requirements, and proof-of-concept projects.

Example of Zero-Shot Prompting

The best way to understand zero-shot prompting is to consider an example. The clearest examples come from use cases that involve natural language processing. For example, you can use zero-shot prompting to generate reviews for a restaurant. With traditional machine learning approaches, the model must first train on a large dataset of reviews for the specific restaurant or for similar restaurants.

On the other hand, zero-shot prompting enables generating reviews for restaurants that an AI model encounters for the first time. In addition, you can offer some type of context in the prompt to describe the type of output you need. Here is an example of a zero-shot prompt for a restaurant review generation task.

Write a positive review for an Indian restaurant with the best curry in the US.

How is Zero-Shot Prompting Different from Few-Shot Prompting?

Few-shot prompting places a small number of task-specific examples in the prompt itself; the LLM is not retrained on them. It is useful when you have a handful of examples and need the model to follow a particular pattern or output format, whereas zero-shot prompting relies on the instruction alone and focuses more on speed and efficiency. Zero-shot prompting also favors adaptability and flexibility for managing diverse tasks and prompts. On the other hand, few-shot prompting supports rapid experimentation, because you can swap the examples to iterate on and test ideas quickly.
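
The difference is easiest to see side by side. In this sketch, the hypothetical helper `build_prompt` produces a zero-shot prompt when no examples are passed and a few-shot prompt when they are. The `Review:`/`Sentiment:` layout and the sample reviews are assumptions made up for the illustration.

```python
def build_prompt(instruction, query, examples=()):
    """Build a prompt; with no examples it is zero-shot, otherwise few-shot."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        # Each worked example shows the model the expected input/output pattern.
        lines.append(f"Review: {example_input}")
        lines.append(f"Sentiment: {example_output}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


instruction = "Classify the sentiment of each review as positive or negative."
query = "The curry was bland and cold."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("Loved the naan.", "positive"),
        ("Service was rude.", "negative"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

In both cases the model's weights stay the same. The only thing that changes is how much guidance the prompt carries.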

Final Words

This review of zero-shot prompting shows why it has been effective in encouraging the growth of AI. Zero-shot prompting is a dependable choice for prompting LLMs when you have to work with limited resources, and it is a powerful technique for generating creative outputs. It encourages AI systems to use their existing knowledge, together with the context provided for a specific task, to generate responses. Learn more about prompt engineering and the role zero-shot prompting plays in getting better results from AI systems.