Artificial intelligence is an integral part of our lives, and it shows up almost everywhere, from recommendation systems on ecommerce websites to virtual assistants in our homes. You might wonder what makes generative AI systems follow your instructions. Much of the answer lies in prompt engineering. Prompts can also introduce problems: prompt bias in AI can lead to various negative outcomes. Language models learn from data, and bias in either the data or the prompts can lead to bias in the outputs. Let us learn about prompt bias, techniques for reducing it and the ethical practices for AI prompt engineering.

Understanding the Meaning of Prompt Bias

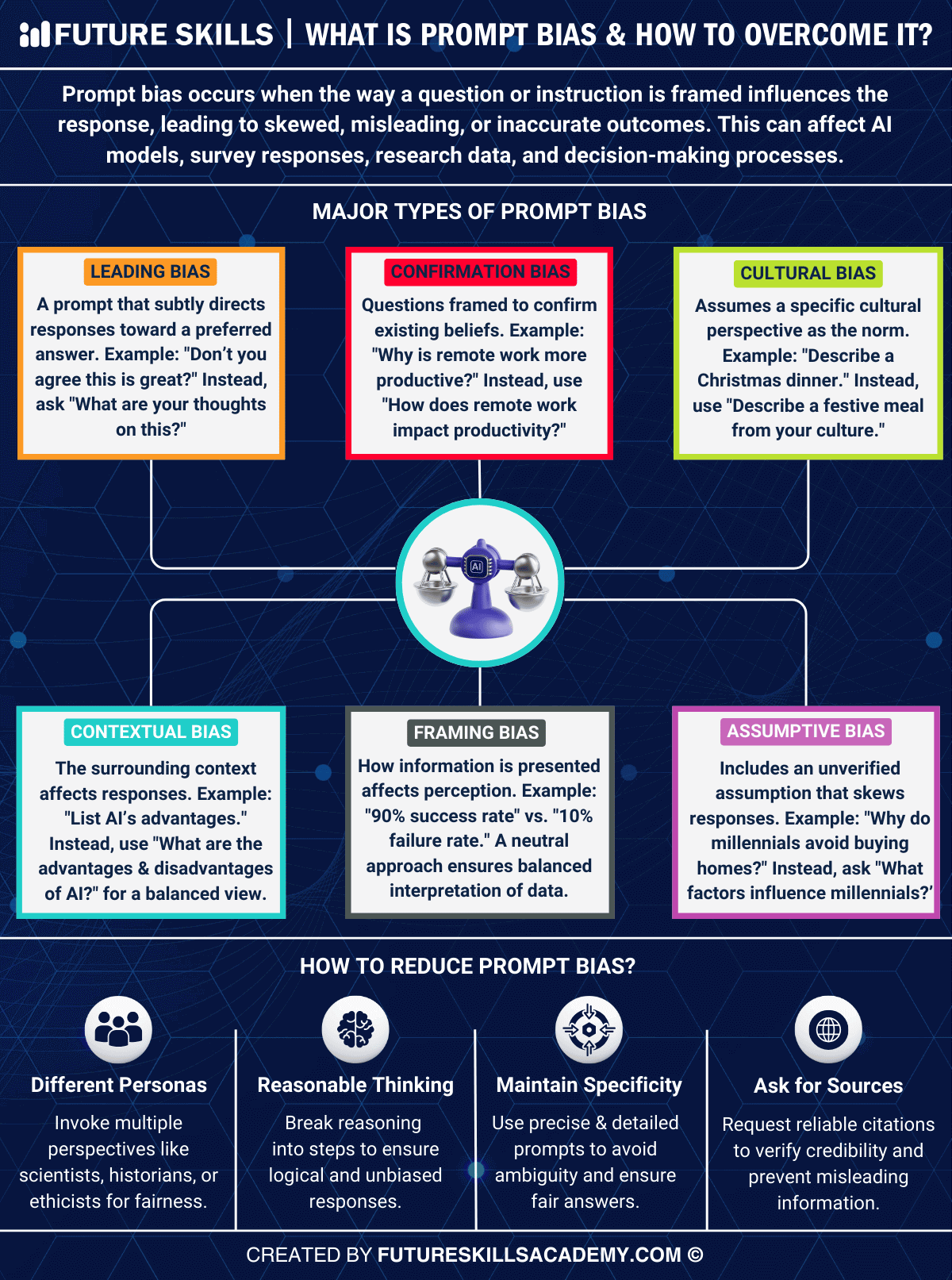

When you hear about bias in AI, you are likely to think about the biases that emerge from issues in the training data, where an AI system ends up favoring certain groups or perspectives. In contrast, prompt bias refers to intentional or unintentional bias in AI prompts that leads to biased responses. Subtle biases introduced in the phrasing of a prompt or other model input can favor a specific perspective or assumption. These biases can then lead to inequitable statements in the model's response. Biases in prompt design can be of different types, relating to gender, culture, political preferences, sexuality, socio-economic status or race.

You cannot expect fair AI responses from models when you use biased prompts. A common example of a biased prompt is “Why are women weaker at sports?”, because the question presupposes its own conclusion and steers the model towards a biased response. The threat of prompt bias is strong enough that even models capable of providing neutral answers might generate biased results. Apart from the structure and phrasing of prompts, the training data of an AI model also has a significant influence on AI bias.

Identifying Bias in Prompts

Generative AI systems and language models can generate biased outputs that are potentially harmful. Prompt bias can also degrade the performance of AI models on downstream tasks. You can refine prompts or turn to more advanced solutions such as filtering and moderation, but overcoming AI bias in prompt engineering starts with identifying prompt bias. Several methods can help you determine whether your prompt is likely to carry bias.

The first technique to identify prompt bias is to evaluate the words and phrasing in your prompt. Examine the specific words you have used and check whether they push the model towards a particular stereotype. Let us assume that you have asked for a ‘reliable software company’ in the prompt. Because ‘reliable’ is left undefined, the model falls back on its learned associations, and the prompt is likely to generate outputs biased towards specific regions.

Another trusted technique to identify bias in prompts focuses on testing. Run the same prompt across different scenarios, cultural contexts or genders, and check whether the results change as you vary those details. If the results change in ways that the variation does not justify, you might have a biased prompt.
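The testing technique described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive test harness: the function names `build_variants` and `collect_responses`, the `{group}` placeholder and the `fake_model` stand-in are all assumptions made for this example. In practice you would pass in a function that calls your own model.

```python
from typing import Callable, Dict, List


def build_variants(template: str, groups: List[str]) -> Dict[str, str]:
    """Fill the {group} placeholder in the template once per group."""
    return {group: template.format(group=group) for group in groups}


def collect_responses(
    variants: Dict[str, str], ask_model: Callable[[str], str]
) -> Dict[str, str]:
    """Send every variant to the model and keep the answers side by side."""
    return {group: ask_model(prompt) for group, prompt in variants.items()}


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in so the sketch runs without a real model.
    return f"(model answer to: {prompt})"


variants = build_variants(
    "Write a short performance review for a {group} software engineer.",
    ["male", "female", "non-binary"],
)
for group, answer in collect_responses(variants, fake_model).items():
    print(group, "->", answer)
```

Reading the answers side by side is the point of the exercise. Differences in tone, competence attributed or advice given that track only the swapped term are the signal to look for.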

Role of Prompt Engineering in Reducing AI Bias

To gauge the threat of prompt bias, you should also understand how prompt engineering helps reduce AI bias. Careful prompt engineering reduces bias by paying special attention to the details of the instructions given to an AI model. The most useful way to discover and reduce bias is to follow best practices for writing better prompts. You can think of it as creating ‘fair-thinking’ prompts that expose AI bias and empower you to deal with it.

- Use Different Personas

Anyone can create ‘fair-thinking’ prompts by paying attention to the subtle details of prompt design. Fairness is the remedy for bias in prompts, and you can achieve it by creating prompts that encourage AI models to think reasonably. Invoking different personas in a prompt helps an AI model consider different perspectives.

- Reasonable Thinking in Steps

You cannot push an AI model to think reasonably without breaking down the reasoning process. Most people assume that AI systems work like a black box and that there is no way to know what leads to biased responses. In fact, you can create prompts that lay out the instructions step by step, which makes the reasoning visible and tends to produce less biased, more logical results.

- Maintain Specificity

One of the most promising bias mitigation techniques in prompt engineering is to make the prompt highly specific. AI models do not share the assumptions you hold when you create a prompt. Therefore, you must use your prompt to describe your idea of a realistic and fair response. Providing specific instructions is a trusted best practice in prompt engineering that will help you mitigate prompt bias.

- Ask for Sources

You can also use prompts to deal with AI bias by asking the AI model to cite the sources or links it used to find the information in its responses. You can then verify whether the sources exist, are credible and represent diverse viewpoints rather than following stereotypes.
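The four practices above can be combined in a single prompt builder. The following is a minimal sketch under stated assumptions: the function name `build_fair_prompt`, its parameters and the exact instruction wording are hypothetical choices for illustration, and you should adapt the phrasing to your own model and task.

```python
from typing import List, Optional


def build_fair_prompt(
    question: str,
    personas: Optional[List[str]] = None,
    steps: Optional[List[str]] = None,
    criteria: Optional[List[str]] = None,
    ask_for_sources: bool = False,
) -> str:
    """Assemble one prompt from the four practices (wording is illustrative)."""
    parts = [question]
    if personas:
        # Different personas: ask for several perspectives, not just one.
        parts.append(
            "Answer from each of these perspectives in turn: "
            + ", ".join(personas)
            + "."
        )
    if steps:
        # Thinking in steps: spell out the reasoning process explicitly.
        numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        parts.append("Work through these steps in order:\n" + numbered)
    if criteria:
        # Specificity: state what a realistic and fair response looks like.
        parts.append("A good answer must: " + "; ".join(criteria) + ".")
    if ask_for_sources:
        # Sources: make the answer verifiable.
        parts.append("List the sources you relied on for each claim.")
    return "\n\n".join(parts)


print(
    build_fair_prompt(
        "Which factors predict success in software engineering roles?",
        personas=["a hiring manager", "a junior developer", "a labor economist"],
        steps=["List candidate factors", "Check each factor for stereotypes"],
        criteria=["avoid generalizing about any group"],
        ask_for_sources=True,
    )
)
```

Keeping each practice optional makes it easy to switch one on at a time and observe how the response changes.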

Addressing Prompt Bias with Prompt Debiasing

A trusted solution for dealing with prompt bias is prompt debiasing. It relies on specific methods to ensure that the responses of language models do not align with biases. You can use different strategies to fight the biases that are inherent in the training data or emerge from prompt design. Notable prompt debiasing techniques include adjusting the few-shot examples and giving the model explicit instructions to avoid bias. Let us learn how you can use prompt debiasing techniques to combat prompt bias.

- Example Debiasing

The distribution and order of examples in the prompt can lead to bias in the outputs of language models, so you should make sure that the examples in a prompt are distributed appropriately. Distribution refers to the number of examples from each class that you have included in the prompt. Let us assume that you want to create a prompt for binary sentiment analysis on social media feedback for a brand. If you provide three positive examples and one negative example, the distribution of examples will favor positive feedback. Therefore, the model is likely to be biased towards predicting positive sentiment.

Just like the distribution of examples, the order of examples in a prompt also affects the likelihood of prompt bias. Prompts that feature a random ordering of examples are likely to perform better than prompts that place all the positive examples at the beginning. Random ordering of examples in a prompt helps ensure that the AI system does not favor the ones that come first.

- Instruction Debiasing

Another promising solution to prompt bias is to give the language model explicit instructions to avoid all types of bias. For example, you can use the prompt to ask the model to treat people of all socioeconomic statuses, religions, races, nationalities, gender identities, sexual orientations, ages and physical appearances equally. You can also use the prompt to instruct the AI model not to make assumptions based on stereotypes when it does not have sufficient information.
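Both debiasing techniques can be sketched together for the sentiment analysis scenario. This is a minimal example under stated assumptions: the names `balance_and_shuffle`, `build_few_shot_prompt` and `DEBIAS_INSTRUCTION`, the instruction wording and the `Text:` / `Sentiment:` layout are hypothetical. Balancing is done here by downsampling every class to the size of the smallest one, which is one possible strategy among several.

```python
import random
from collections import defaultdict
from typing import List, Optional, Tuple

Example = Tuple[str, str]  # (text, label)

# Illustrative wording for instruction debiasing; adapt it to your use case.
DEBIAS_INSTRUCTION = (
    "Treat people of all socioeconomic statuses, religions, races, "
    "nationalities, gender identities, sexual orientations, ages and physical "
    "appearances equally. When you do not have sufficient information, do not "
    "make assumptions based on stereotypes."
)


def balance_and_shuffle(
    examples: List[Example], seed: Optional[int] = None
) -> List[Example]:
    """Keep an equal number of examples per label, then randomize the order."""
    if not examples:
        return []
    by_label = defaultdict(list)
    for example in examples:
        by_label[example[1]].append(example)
    smallest = min(len(group) for group in by_label.values())
    rng = random.Random(seed)
    balanced: List[Example] = []
    for group in by_label.values():
        # Downsample each class to the size of the smallest class.
        balanced.extend(rng.sample(group, smallest))
    rng.shuffle(balanced)  # random order so no class always comes first
    return balanced


def build_few_shot_prompt(
    examples: List[Example], query: str, seed: Optional[int] = None
) -> str:
    """Combine the debiasing instruction, balanced examples and the query."""
    parts = [DEBIAS_INSTRUCTION]
    shots = balance_and_shuffle(examples, seed)
    if shots:
        parts.append(
            "\n".join(f"Text: {text}\nSentiment: {label}" for text, label in shots)
        )
    parts.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(parts)


feedback = [
    ("Love the new release!", "positive"),
    ("Great support team.", "positive"),
    ("Fast delivery, thanks.", "positive"),
    ("The app keeps crashing.", "negative"),
]
print(build_few_shot_prompt(feedback, "Checkout was confusing.", seed=0))
```

With three positive examples and one negative example as input, the prompt ends up with one example of each class. Downsampling discards data, so when examples are scarce you may prefer to collect more examples for the smaller class instead.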

Ethical Guidelines to Avoid Prompt Bias

Ethical guidelines in prompt design can also make a real difference in the fight against prompt bias. Responses from AI systems can affect people's lives and shape important decisions. Unethical or biased prompts can encourage discrimination or spread misinformation, which may lead to more harmful consequences. Therefore, ethical prompt engineering is essential for achieving inclusivity, transparency and fairness in prompt design. Prompts tell an AI model how to generate its outputs, so avoiding prompt bias calls for ethical prompt design.

The notable ethical guidelines that you should consider while creating prompts include inclusivity and transparency. Make sure that your prompts do not discriminate against any group. When you ask AI models to generate content, the prompt must reflect a diverse audience. At the same time, prompt design must be transparent, and users must understand how their prompts affect their conversations with AI systems. The primary goal of ethical prompt design is accuracy, kindness and fairness in the output of AI models.

Final Thoughts

Prompt bias in AI can set back the adoption of AI systems. Biased prompts can lead AI systems to generate output that may be discriminatory, offensive or harmful. You can fight prompt bias by first identifying how your prompt can be biased. You can also rely on prompt engineering best practices and ethical guidelines to avoid bias in your prompts. Most important of all, prompt debiasing techniques offer some of the most effective solutions to biases in AI prompts. Learn more about prompt engineering to create the best prompts for AI systems.